
Article • From promise to practice

Healthcare AI's trust issues (and what it takes to overcome them)

The promise of AI in healthcare is enormous; however, most people who have actually worked with today's models will agree that the technology is not quite there yet. At MEDICA, a panel of AI experts and healthcare leaders tackled a fundamental question: What stands between AI's current state and its potential as a reliable healthcare companion? The discussion revealed that technical capability alone isn't enough. Transforming AI into a trustworthy partner in patient care also requires overcoming challenges in education, infrastructure, oversight, and realistic risk assessment.

Article: Wolfgang Behrends

The panellists named knowledge as the first challenge. Waclaw Lukowicz, co-founder of Key4Lab, described education as essential to responsible AI adoption. When healthcare professionals understand how AI works, he argued, they develop both the confidence to use it and the judgment to recognize its limitations. This understanding serves as a critical safety mechanism.

Dr. Harvey Castro, Chief AI Officer at Phantom Space, illustrated why this matters: AI is a tool, and like a car in the hands of an untrained driver, it can endanger patients if used incorrectly. The problem is compounded by the nature of AI's output: systems like ChatGPT produce responses that sound convincing but require expertise to evaluate. Without proper education, clinicians may struggle to distinguish legitimate information from plausible-sounding fabrications.

The challenge extends beyond individual users. Castro noted that doctors must continue to apply their own critical thinking rather than blindly following confident AI suggestions. To prevent deskilling, education must emphasize AI’s role as a decision-support tool, not a replacement for clinical judgment.

Recommended article

Article • Diagnostic assistant systems

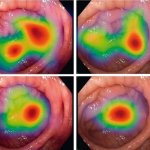

AI in endoscopy: helper, trainer – influencer?

Artificial intelligence (AI) is increasing its foothold in endoscopy. Although the algorithms often detect pathologies faster than humans, their use also generates new problems. PD Dr Alexander Hann from the University Hospital Würzburg points out that the use of AI helpers can affect not only the reporting of findings – but also the person making the findings.

Building trustworthy infrastructure

Dr. Markus Vogel, Chief Medical Information Officer at Microsoft, pointed to infrastructure as another critical hurdle. Healthcare needs AI systems that work as reliably as mobile phone networks – technology that users can depend on without needing to understand every technical detail. But this level of trust requires proof, he said: AI must demonstrate that it delivers better results than existing methods.


Creating that infrastructure means addressing context. Vogel emphasized that models need comprehensive knowledge databases and multimodal input to reach sound conclusions. ‘It's not enough to just put a prompt into a chat window,’ he cautioned, because without proper context, even sophisticated AI can produce potentially dangerous misinformation. The challenge is building systems that integrate seamlessly with clinical workflows while maintaining access to the information they need.

Vogel suggested that natural language interfaces will help make AI interactions more intuitive, reducing barriers to adoption. As AI becomes more agentic and develops a form of identity within healthcare systems, it could improve compliance and workflow integration. However, these advances require robust technical foundations that many healthcare organizations have yet to establish, he pointed out.


Maintaining human oversight

Perhaps the most critical challenge the panel addressed was ensuring adequate human supervision. Castro stressed that continuous monitoring is essential to prevent accidents, particularly because the outputs of AI models can drift over time. Developers must track performance metrics, while clinicians must maintain vigilant oversight of AI recommendations.

This creates a tension: AI promises to reduce workload, but responsible use requires ongoing attention. The solution lies in designing systems that make oversight manageable rather than burdensome. As Waclaw Lukowicz noted, ‘If we give doctors more time to actually treat their patients, it's a huge step forward.’ He argued that AI should handle routine analysis and documentation, freeing clinicians to focus on patient interaction and complex decision-making, while clinicians remain engaged enough to detect errors.

Setting realistic expectations


The panel’s chair and moderator, Prof. Dr. Paul Lukowicz from the German Research Center for Artificial Intelligence (DFKI), highlighted the need for realistic risk assessment. No medical intervention comes with an absolute guarantee of safety, he argued, warning against holding AI to the impossible standard of never causing harm. The task, he added, is to evaluate AI like any other pill or medical procedure: assessing whether it improves outcomes and whether its risks are acceptable and manageable.

This requires finding the middle ground between excessive fear and unwarranted optimism. Waclaw Lukowicz acknowledged that while AI's potential is enormous, the consequences of incorrect use could be catastrophic.

The path forward

Prof. Lukowicz offered a sobering conclusion: ‘AI itself isn't the danger – the real risks come from artificial and natural stupidity, from people placing either too much or too little trust in these powerful tools.’ Transforming AI into a true healthcare companion requires overcoming interconnected challenges: educating users, building reliable infrastructure, maintaining effective oversight, and setting realistic expectations.

These aren't purely technical problems. As Castro noted, varying regulations and standards across countries complicate implementation, though this is primarily a political rather than a technological issue. Success will require coordination across disciplines, with clinicians, developers, regulators, and administrators working together to create systems that augment human expertise while keeping patient safety paramount.

27.01.2026