Image source: Gwangju Institute of Science and Technology

News • Voice pathology detection

Detecting cancer from voice samples: improving the VPD method

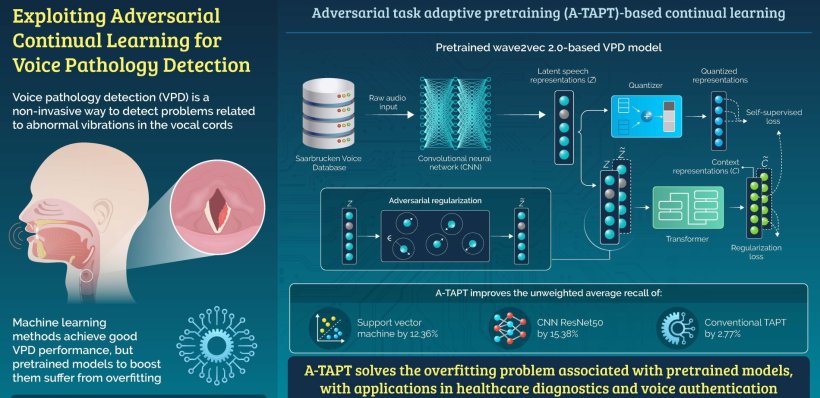

Voice pathology detection (VPD) is a non-invasive procedure that detects voice problems caused by conditions such as cancer and cysts by identifying abnormal vibrations in the vocal cords.

But self-supervised pretrained models can suffer a drop in performance due to overfitting when they are fine-tuned for VPD. Recently, researchers from Gwangju Institute of Science and Technology (GIST) and Massachusetts Institute of Technology (MIT) developed an adversarial task adaptive pretraining approach that improves VPD performance by over 15% compared to the state-of-the-art ResNet50 model. Their article was published in the journal IEEE Signal Processing Letters.

Voice pathology refers to a problem arising from abnormal conditions, such as dysphonia, paralysis, cysts, and even cancer, that cause abnormal vibrations in the vocal cords (or vocal folds). In this context, voice pathology detection (VPD) has received much attention as a non-invasive way to automatically detect voice problems. It consists of two processing modules: a feature extraction module to characterize normal voices and a voice detection module to detect abnormal ones. Machine learning methods like support vector machines (SVMs) and convolutional neural networks (CNNs) have been successfully used as pathological voice detection modules to achieve good VPD performance. In addition, a self-supervised pretrained model can learn generic and rich speech feature representations instead of relying on explicit speech features, which further improves VPD performance. However, fine-tuning these models for VPD leads to overfitting because of the domain shift from conversational speech to the VPD task. As a result, the pretrained model becomes too focused on the training data and generalizes poorly to new data.

By enabling early and accurate diagnosis of voice-related disorders, [our VPD research] could lead to more effective treatments, improving the quality of life of countless individuals

Dongkeon Park

To mitigate this problem, a team of researchers from GIST in South Korea, led by Prof. Hong Kook Kim, has proposed a groundbreaking contrastive learning method that combines wav2vec 2.0, a self-supervised pretrained model for speech signals, with a novel approach called adversarial task adaptive pretraining (A-TAPT). In this approach, they incorporated adversarial regularization during the continual learning process.

The researchers performed various experiments on VPD using the Saarbrücken Voice Database, finding that the proposed A-TAPT showed 12.36% and 15.38% improvements in unweighted average recall (UAR) compared to SVM and CNN ResNet50, respectively. It also achieved a 2.77% higher UAR than conventional TAPT. This shows that A-TAPT is better at mitigating the overfitting problem.
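For readers unfamiliar with the metric, UAR is the plain average of the recall obtained on each class, so a small class (for example, pathological voices) counts as much as a large one. The following is a minimal sketch of that calculation in Python; the function name and the toy labels are illustrative assumptions and are not taken from the study or its data.

```python
from collections import defaultdict


def unweighted_average_recall(y_true, y_pred):
    """Return the mean of per-class recalls (each class weighted equally)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one sample is required")

    total = defaultdict(int)    # number of samples per true class
    correct = defaultdict(int)  # correctly predicted samples per true class
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1

    # Recall of a class = correct predictions for it / samples that belong to it.
    recalls = [correct[label] / total[label] for label in total]
    return sum(recalls) / len(recalls)


# Hypothetical, imbalanced toy example: 8 healthy and 2 pathological samples.
y_true = ["healthy"] * 8 + ["pathological"] * 2
y_pred = ["healthy"] * 8 + ["healthy", "pathological"]

uar = unweighted_average_recall(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"UAR = {uar:.2f}, accuracy = {accuracy:.2f}")
```

In this toy case one of the two pathological samples is missed, so recall is 1.0 for the healthy class and 0.5 for the pathological class. Plain accuracy is dominated by the larger class, while UAR reflects the miss on the smaller one, which is why it is a common choice for imbalanced detection tasks.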

Discussing the long-term implications of this work, Mr. Dongkeon Park, the first author of the study, says: “In a span of five to 10 years, our pioneering research in VPD, developed in collaboration with MIT, may fundamentally transform healthcare, technology, and various industries. By enabling early and accurate diagnosis of voice-related disorders, it could lead to more effective treatments, improving the quality of life of countless individuals.”

The research, performed as part of a GIST-funded project entitled ‘Extending Contrastive Learning to New Data Modalities and Resource-Limited Scenarios’ in collaboration with MIT (Cambridge, MA, USA), promises to redefine the landscape of VPD and artificial intelligence in medical applications. The project team includes Hong Kook Kim (EECS, GIST) and Dina Katabi (EECS, MIT) as Principal Investigators (PIs), as well as Jeany Son (AI Graduate School, GIST), Moongu Jeon (EECS, GIST), and Piotr Indyk (EECS, MIT) as co-PIs.

Prof. Kim points out: “Our partnership with MIT has been instrumental in this success, facilitating ongoing exploration of contrastive learning. The collaboration is more than a mere partnership; it’s a fusion of minds and technologies that strive to reshape not only medical applications but various domains requiring intelligent, adaptive solutions.”

Furthermore, the approach is promising for monitoring health in vocally demanding professions such as call center work, ensuring robust voice authentication in security systems, making artificial intelligence voice assistants more responsive and adaptive, and developing tools for voice quality enhancement in the entertainment industry.

Source: Gwangju Institute of Science and Technology

20.10.2023