Image source: Ulm University; photo: Elvira Eberhardt

News • Funding for research group

How can explainable AI in medicine work?

Artificial intelligence is being used more and more frequently in medicine. But how can we be sure that diagnoses are really accurate and can be understood by doctors? And who is responsible in case of incorrect treatment?

The new interdisciplinary research training group "Knowledge Infusion and Extraction for Explainable Medical AI" (KEMAI) at Ulm University is dedicated to answering these questions. From 2025 onwards, a total of 27 doctoral students will conduct research at the interface of computer science, medicine and ethics. The German Research Foundation (DFG) is funding KEMAI with an initial six million euros over five years.

Computer-aided procedures have been standard in medicine for decades and help doctors make diagnoses. Thanks to artificial intelligence and machine learning, diagnostic imaging procedures in particular are becoming increasingly important and precise, but also more complex. Starting in 2025, the new research training group will investigate how the advantages of knowledge-based and learning-based AI systems can be combined for image-based medical diagnoses. The aim is both to improve accuracy and to enable doctors to follow and understand the decisions made by AI systems. "We hope that better traceability will significantly strengthen the use and acceptance of AI in medicine," explains Professor Timo Ropinski of the Institute of Media Informatics, who is KEMAI's spokesperson.

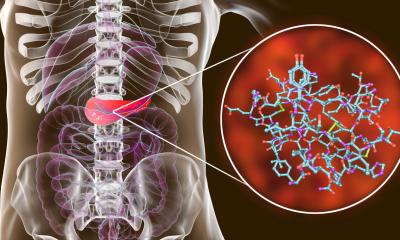

Scientists from the fields of computer science, medicine, ethics and philosophy will work together in the interdisciplinary research group. "That is KEMAI's great strength," says Professor Ropinski. The researchers want to focus on Covid-19, lung carcinomas and fox tapeworm infections, using computed tomography and positron emission tomography as well as other clinical imaging techniques. In addition to these application examples, ethical issues in medical decision-making processes will also be addressed: When do medical professionals trust the AI system? How can such a system signal that a decision is uncertain? Who is liable for incorrect treatment?

"It's about communication between the system and the doctor," explains co-spokesperson Professor Birte Glimm from the Institute for Artificial Intelligence. "We don't want a diagnostic machine, but rather decision support. The diagnosis should still be made by doctors." However, AI systems should be able to explain to doctors as clearly as possible how a prediction was made. This explainability is also essential for the certification of new, AI-supported medical devices. To ensure that sufficient computing power is available for the large amounts of data and the machine learning involved, a new machine learning cluster is also to be set up at Ulm University as part of KEMAI.

The ambitious KEMAI research training group aims for its doctoral students to complete their doctorates within four years, giving them a head start on the job market. A structured qualification programme and innovative research at the highest level go hand in hand. KEMAI is integrated into the International Graduate School in Molecular Medicine Ulm (IGradU), which was founded in 2007 as part of the Excellence Initiative, and thus benefits from structures that are already well established.

Source: Ulm University

02.06.2024