Brain-computer interfaces: Getting a Grasp on how we think

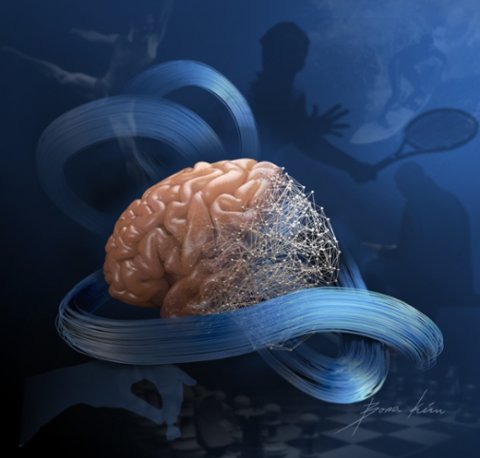

A world where machines can be controlled by thought alone – such is the promise of so-called brain-computer interfaces (BCI).

Report: Sascha Keutel

BCIs are communication systems, made up of both hardware and software, that read brain and nerve signals, convert them into electrical signals and translate human thoughts into machine commands. Developers of BCIs rely on artificial intelligence, neural network models and big data to mitigate the effects of Alzheimer’s, Parkinson’s, epilepsy and other neurological conditions, to develop even better prostheses, or ultimately to improve human cognition.

© MoreGrasp

The MoreGrasp project began with the idea of a ground-breaking advance in thought-controlled grasp neuroprosthetics. The aim was to develop a sensory grasp neuroprosthesis to support the daily activities of people whose hand function is severely or completely impaired due to spinal cord injuries. The motor function of the neuroprosthesis was to be controlled intuitively by means of a brain-computer interface, with an emphasis on natural motor patterns.

A mental detour

After the three-year project came to an end, members of the project consortium reported the breakthrough. The consortium, led by Gernot Müller-Putz, head of the Institute of Neural Engineering at TU Graz, includes the University of Heidelberg, the University of Glasgow, two companies – Medel Medizinische Elektronik and Bitbrain – and the Know Centre. ‘In tetraplegia, all circuits in the brain and muscles in the body areas concerned are still intact, but the neurological connection between the brain and limb is interrupted. We bypass this by communicating via a computer, which in turn passes on the command to the muscles,’ explains Müller-Putz. Electrodes attached to the outside of the arm stimulate the muscles and can, for example, trigger the closing and opening of the fingers.

The mental ‘detour’ of thinking of an arbitrary movement pattern, chosen only because it is clearly distinguishable, is no longer necessary, as Müller-Putz explains. ‘We now use so-called “attempted movement”.’ Here, the test subject attempts to carry out the movement itself – for instance, to grasp a glass of water. Due to the tetraplegia, the resulting brain signal is not passed on to the limb, but it can be measured by EEG and processed by the computer system. Müller-Putz is extremely pleased with the success of the research. ‘We are now working with signals that only differ from each other very slightly; nevertheless we manage to control the neuroprosthesis successfully. For users, this results in a completely new possibility of making movement sequences easier – especially during training.’
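To give a feel for what telling apart signals that ‘only differ from each other very slightly’ can mean in practice, here is a minimal sketch in Python. It is not the MoreGrasp pipeline: the ‘EEG’ trials are synthetic, the two classes stand for two hypothetical attempted grasps, and the nearest-centroid classifier is chosen only because it is simple. All names and numbers below are assumptions made for illustration.

```python
import numpy as np


def make_trials(rng, n_trials, shift, n_channels=8, n_samples=50):
    """Synthetic 'EEG' epochs (NOT real data): a slow deflection plus noise.

    `shift` slightly scales the deflection, so the two classes differ only a little.
    Each trial is flattened into one feature vector.
    """
    t = np.linspace(0.0, 1.0, n_samples)
    template = -np.sin(np.pi * t) * (1.0 + shift)
    noise = rng.normal(0.0, 1.0, size=(n_trials, n_channels, n_samples))
    return (template + noise).reshape(n_trials, -1)


def fit_centroids(X, y):
    """Store the mean feature vector of each class."""
    return {label: X[y == label].mean(axis=0) for label in np.unique(y)}


def predict(centroids, X):
    """Assign each trial to the class whose mean it is closest to."""
    labels = np.array(list(centroids))
    dists = np.stack(
        [np.linalg.norm(X - centroids[label], axis=1) for label in labels], axis=1
    )
    return labels[dists.argmin(axis=1)]


rng = np.random.default_rng(0)

# Class 0 and class 1 stand for two hypothetical attempted grasps.
X_train = np.vstack([make_trials(rng, 200, 0.0), make_trials(rng, 200, 0.3)])
y_train = np.repeat([0, 1], 200)
X_test = np.vstack([make_trials(rng, 100, 0.0), make_trials(rng, 100, 0.3)])
y_test = np.repeat([0, 1], 100)

centroids = fit_centroids(X_train, y_train)
accuracy = float((predict(centroids, X_test) == y_test).mean())
print(f"held-out accuracy: {accuracy:.2f}")
```

On any single trial the difference between the two classes is buried in noise; it only becomes usable because the classifier averages over many training trials and combines all channels and time points.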

Decoding conversations in the brain’s motor cortex

How does the brain talk with our arm? The body doesn’t use English, or any other spoken language. Biomedical engineers are developing methods for decoding the conversation by analysing electrical patterns in the motor control areas of the brain. In one study, researchers at Emory University leveraged advances from the field of ‘deep learning’. These computing approaches, which use artificial neural networks, let researchers uncover patterns in complex data sets that had previously been overlooked, lead author Dr Chethan Pandarinath points out.

The research team developed an approach to allow their artificial neural networks to mimic the biological networks that make our everyday movements possible. In doing so, the researchers gained a much better understanding of what the biological networks were doing. Eventually, these techniques could help paralysed people to move their limbs, or improve the treatment of people with Parkinson’s, says Pandarinath, an assistant professor in the Wallace H Coulter Department of Biomedical Engineering at Georgia Tech and Emory University, USA.

For someone who has a spinal cord injury, the new technology could power ‘brain-machine interfaces’ that discern the intent behind the brain’s signals and directly stimulate someone’s muscles. ‘In the past, brain-machine interfaces have mostly worked by trying to decode very high-level commands, such as, “I want to move my arm to the right, or left”,’ Pandarinath points out. ‘With these new innovations, we believe we’ll actually be able to decode subtle signals related to the control of muscles, and make brain-machine interfaces that behave much more like a person’s own limbs.’
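The difference between decoding a high-level command and decoding continuous muscle control can be illustrated with a small Python sketch. It is deliberately not the deep-learning approach of the study: it swaps in a plain linear (ridge regression) decoder, and the ‘firing rates’ and ‘muscle activity’ are simulated. The function `fit_ridge`, the helper `simulate` and all sizes are assumptions made for this example.

```python
import numpy as np


def fit_ridge(X, Y, lam=1.0):
    """Ridge regression: solve (X^T X + lam * I) W = X^T Y for the weights W."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ Y)


rng = np.random.default_rng(1)
n_neurons, n_muscles = 20, 3

# Hypothetical ground-truth mapping from neural activity to muscle activity.
true_map = rng.normal(size=(n_neurons, n_muscles))


def simulate(n_steps):
    """Simulated data (NOT recordings): firing rates and noisy muscle activity."""
    rates = rng.normal(size=(n_steps, n_neurons))
    muscles = rates @ true_map + 0.5 * rng.normal(size=(n_steps, n_muscles))
    return rates, muscles


rates_train, muscles_train = simulate(500)
rates_test, muscles_test = simulate(200)

# Fit on one stretch of data, then predict muscle activity on unseen data.
W = fit_ridge(rates_train, muscles_train)
predicted = rates_test @ W

# Correlation between predicted and simulated activity, per muscle.
correlations = [
    float(np.corrcoef(predicted[:, m], muscles_test[:, m])[0, 1])
    for m in range(n_muscles)
]
min_corr = min(correlations)
print("per-muscle correlation:", [round(c, 2) for c in correlations])
```

A command decoder would reduce each moment to a single choice such as ‘left’ or ‘right’. Here the output is a continuous value for every muscle at every time step, which is closer in spirit to an interface that behaves like a person’s own limb.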

11.11.2018