Image credit: AHRI

News • Deep learning vs Aids

AI app could help diagnose HIV more accurately

Pioneering technology developed by University College London (UCL) and Africa Health Research Institute (AHRI) researchers could transform the ability to accurately interpret HIV test results, particularly in low- and middle-income countries.

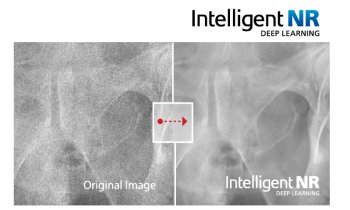

Academics from the London Centre for Nanotechnology at UCL and AHRI used deep learning (artificial intelligence/AI) algorithms to improve health workers’ ability to diagnose HIV using lateral flow tests in rural South Africa. Their findings, published in Nature Medicine, come from the first and largest study to apply machine learning to field-acquired HIV test results in order to help classify them as positive or negative.

More than 100 million HIV tests are performed around the world annually, meaning even a small improvement in quality assurance could impact the lives of millions of people by reducing the risk of false positives and negatives. By harnessing the potential of mobile phone sensors, cameras, processing power and data sharing capabilities, the team developed an app that can read test results from an image taken by end users on a mobile device. It may also be able to report results to public health systems for better data collection and ongoing care.

Lateral flow tests – or rapid diagnostic tests (RDTs) – have been used throughout the Covid-19 pandemic and play an important role in disease control and screening. While they provide a quick and easy way of testing outside of clinical settings, including self-testing, interpretation of test results can sometimes be challenging for lay people.

Self-testing relies on people self-reporting results for clinical support and surveillance purposes. Evidence suggests that some lay caregivers can struggle to interpret RDTs because of colour blindness or short-sightedness. The new study examined whether an AI app could support HIV testing decisions made by fieldworkers, nurses and community health workers.

A team of more than 60 trained field workers at AHRI first helped build a library of more than 11,000 images of HIV tests taken in various conditions in the field in KwaZulu-Natal, South Africa, using a mobile health tool and image capture protocol developed by UCL. The UCL team then used these images as training data for their machine-learning algorithm. They compared how accurately the algorithm classified images as either negative or positive, versus users interpreting test results by eye.

Lead author and Director of i-sense Professor Rachel McKendry (UCL London Centre for Nanotechnology and UCL Division of Medicine) said: “This study is a really strong partnership with AHRI that demonstrates the power of using deep learning to successfully classify ‘real-world’ field-acquired rapid test images, and reduce the number of errors that may happen when reading test results by eye. This research shows the positive impact the mobile health tools can have in low- and middle-income countries, and paves the way for a larger study in the future.”

In a pilot field study, five users of varying experience (ranging from nurses to newly trained community health workers) used the mobile app to record their interpretation of 40 HIV test results and to capture a picture of the tests to be read automatically by the machine learning classifier. All participants were able to use the app without training. The machine learning classifier reduced errors in reading RDTs, correctly classifying RDT images with 98.9% accuracy overall, compared with 92.1% for traditional interpretation of the tests by eye. A previous study of users of varying experience in interpreting HIV RDTs showed that accuracy varied between 80% and 97%. RDTs could also support the diagnosis of other diseases, including malaria, syphilis, tuberculosis, influenza and non-communicable diseases.
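To put the two accuracy figures above side by side, the short Python sketch below converts them into error rates and a relative reduction in misread tests. It is illustrative arithmetic only: the function and variable names are hypothetical, and it assumes both percentages describe overall accuracy on comparable sets of test images.

```python
def error_rate(accuracy_pct: float) -> float:
    """Convert an overall accuracy percentage into an error-rate percentage."""
    return 100.0 - accuracy_pct


# Accuracy figures quoted in the article (overall, in percent).
CLASSIFIER_ACCURACY = 98.9  # machine learning classifier
BY_EYE_ACCURACY = 92.1      # traditional interpretation by eye

classifier_errors = error_rate(CLASSIFIER_ACCURACY)
by_eye_errors = error_rate(BY_EYE_ACCURACY)

# Share of by-eye errors that would be avoided, assuming comparable test sets.
relative_reduction = 1 - classifier_errors / by_eye_errors

print(f"Classifier error rate: {classifier_errors:.1f}%")
print(f"By-eye error rate:     {by_eye_errors:.1f}%")
print(f"Relative reduction in errors: {relative_reduction:.0%}")
```

On these figures, the classifier misreads roughly 1 test in 100 where reading by eye misreads roughly 8 in 100. How far that gap carries over to other settings is something the larger planned evaluation would need to establish.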

First author Dr Valérian Turbé (UCL London Centre for Nanotechnology), an i-sense researcher in the McKendry group, said: “Having spent some time in KwaZulu-Natal with fieldworkers organising the collection of data, I’ve seen how difficult it is for people to access basic healthcare services. If these tools can help train people to interpret the images, you can make a big difference in detecting very early-stage HIV, meaning better access to healthcare or avoiding an incorrect diagnosis. This could have massive implications on people’s lives, especially as HIV is transmissible.”

The team now plan a larger evaluation study to assess the performance of the system with users of differing ages, genders and levels of digital literacy.

A digital system has also been designed to connect to laboratory and healthcare management systems, where RDT deployment and supply can be better monitored and managed.

AHRI Clinical Research Faculty Lead, Professor Maryam Shahmanesh (UCL Institute for Global Health), said: “Trials we have conducted in the area have found that HIV self-testing is effective in reaching large numbers of adolescents and young men. However, HIV self-testing has been less successful in linking people to biomedical prevention and treatment. A digital system that connects a test result and the person to healthcare, including linkage to antiretroviral therapy and pre-exposure prophylaxis, has the potential to decentralise HIV prevention and deliver on UNAIDS goals to eliminate HIV.”

Dr Kobus Herbst, AHRI’s Population Science Faculty lead, added: “This study shows how machine learning approaches can benefit from large and diverse datasets available from the global South, but at the same time be responsive to local health priorities and needs.”

The researchers also suggest that real-time reporting of RDT results through a connected device could help in workforce training and outbreak management, for example by highlighting ‘hotspots’ where the number of positive tests is high. They are currently extending the approach to other infections, including Covid-19, and to non-communicable diseases.

Former AHRI Director Professor Deenan Pillay (UCL Infection & Immunity) said: “As digital health research moves into the mainstream, there remain serious concerns that those populations most at need around the world will not benefit as much as those in high income settings. Our work demonstrates how, with appropriate partnerships and engagement, we can demonstrate utility and benefit for those in low- and middle-income settings.”

Source: University College London

08.07.2021