Image source: Unsplash/ilgmyzin (ChatGPT logo); user query text from: Johnson et al., Cancer Spectrum 2023 (CC BY 4.0)

News • Study on chatbot reliability and accuracy

Can you count on ChatGPT for cancer information?

A new study examined chatbots and artificial intelligence (AI) as they become popular resources for cancer information.

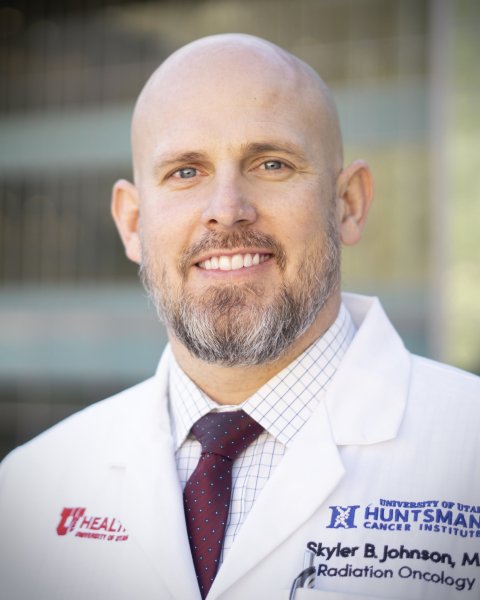

Image source: University of Utah

Researchers found these resources give accurate information when asked about common cancer myths and misconceptions. In the first study of its kind, Skyler Johnson, MD, physician-scientist at Huntsman Cancer Institute and assistant professor in the department of radiation oncology at the University of Utah (the U), evaluated the reliability and accuracy of ChatGPT’s cancer information. The study was published in the Journal of the National Cancer Institute Cancer Spectrum.

Using the National Cancer Institute’s (NCI) common myths and misconceptions about cancer, Johnson and his team found that 97% of ChatGPT’s answers were correct. However, this finding comes with some important caveats, including a concern among the team that some of the ChatGPT answers could be interpreted incorrectly. “This could lead to some bad decisions by cancer patients,” says Johnson. The team suggested caution when advising patients about whether they should use chatbots for information about cancer.

Reviewers in the study were blinded, meaning they didn’t know whether the answers came from the chatbot or the NCI. Though the answers were accurate, reviewers found ChatGPT’s language was indirect, vague, and in some cases, unclear. “I recognize and understand how difficult it can feel for cancer patients and caregivers to access accurate information,” says Johnson. “These sources need to be studied so that we can help cancer patients navigate the murky waters that exist in the online information environment as they try to seek answers about their diagnoses.”

Incorrect information can harm cancer patients. A previous study by Johnson and his team, published in the Journal of the National Cancer Institute, found that misinformation was common on social media and had the potential to harm cancer patients.

The next steps are to evaluate how often patients are using chatbots to seek out information about cancer, what questions they are asking, and whether AI chatbots provide accurate answers to uncommon or unusual questions about cancer.

Source: University of Utah

21.03.2023