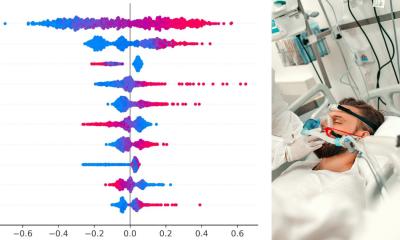

News • Study shows lack of reliability

Can an AI predict Covid-19 from cough sounds? Not really, study finds

Researchers found that technology using Machine Learning performed no better than simply asking people to report their symptoms.

Image source: Adobe Stock/pathdoc

Artificial Intelligence (AI) is unable to accurately predict whether someone has Covid-19 based on the sound of their cough, according to a study involving statisticians from the Department of Mathematics at King’s College London. By analysing the accuracy of Machine Learning (ML) algorithms in detecting Covid-19 infections, researchers found that this technology did not improve on a model that used only self-reported symptoms and demographic data, such as age and gender.

Commissioned by the UK Health Security Agency as part of the government’s pandemic response, the researchers conducted an independent review of how ML algorithms performed as a Covid-19 screening tool. The aim was to determine whether AI classifiers could serve as an alternative to lateral flow tests, one that might be less expensive, less environmentally wasteful and more accurate.

Led by the Alan Turing Institute and Royal Statistical Society, the project involved a team including Professor Steven Gilmour, Dr Davide Pigoli, Dr Vasiliki Koutra and Kieran Baker from the Department of Mathematics at King’s, together with researchers from the University of Oxford, Imperial College London and University College London. The team collected and analysed a dataset of audio recordings from 67,842 individuals, recruited in part via the NHS Test and Trace programme and the REACT-1 study, who had also taken a PCR test. Participants were asked to record themselves coughing, breathing and talking. Their PCR results showed that over 23,000 of them had tested positive for Covid-19.

Researchers then trained an ML model on these audio recordings, comparing its predictions with people's Covid-19 test results. At first, in an unadjusted analysis of the data, the AI classifiers appeared to predict Covid-19 infection with high accuracy – consistent with the findings of previous studies, including research by Massachusetts Institute of Technology, which reported up to 98.5 per cent accuracy from AI classifiers predicting whether someone had Covid-19 based on audio recordings.

However, with further analysis, the results of this latest study revealed something different. “When we grouped participants of the same ages, genders and symptoms into pairs, with only one of them having Covid-19, and evaluated these models on the matched data, the AI models failed to perform well in terms of accuracy,” said Kieran Baker, PhD student at King’s College London and research assistant at the Alan Turing Institute. “It appeared that the accuracy was due to an effect in statistics called confounding. Almost all people in our dataset with Covid-19 have some symptoms, and so the model is learning that if you have symptoms in the audio, this is a proxy for Covid-19 infection, and no respiratory symptoms means Covid-free. It thus overdiagnosed the number of Covid-19 cases. This confounding is caused by a phenomenon called recruitment bias, because Test and Trace only recruited people with symptoms – so the sample wasn’t representative of the population at large.”
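The confounding effect described here can be illustrated with a small simulation. The sketch below is not the study's method or data: the infection and symptom rates are invented for illustration, the "classifier" is a toy rule that has learned only "audible symptoms means Covid-19", and matching is done on symptom status alone, whereas the study matched on age, gender and symptoms. All function names are hypothetical.

```python
import random


def simulate(n, seed=0):
    """Return (has_covid, has_symptoms) tuples under purely illustrative rates."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        covid = rng.random() < 0.35
        # Assumed rates: symptoms are far more common among positives.
        symptoms = rng.random() < (0.9 if covid else 0.2)
        people.append((covid, symptoms))
    return people


def predict(symptoms):
    """Toy classifier that has only learned 'symptoms => Covid-19'."""
    return symptoms


def accuracy(people):
    """Fraction of people whose predicted status equals their true status."""
    return sum(predict(s) == c for c, s in people) / len(people)


def matched_sample(people):
    """Pair each positive with a negative who has the same symptom status."""
    matched = []
    for status in (True, False):
        pos = [p for p in people if p[0] and p[1] == status]
        neg = [p for p in people if not p[0] and p[1] == status]
        k = min(len(pos), len(neg))  # keep equal numbers from each group
        matched += pos[:k] + neg[:k]
    return matched


people = simulate(20000)
unadjusted = accuracy(people)
matched = accuracy(matched_sample(people))
print(f"unadjusted accuracy: {unadjusted:.3f}")
print(f"matched accuracy:    {matched:.3f}")
```

On the full simulated sample the toy rule scores well above chance, because symptoms and infection are correlated. On the matched sample, both members of every pair share the same symptom status and so receive the same prediction, which means exactly one of them is classified correctly and accuracy falls to 0.5.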

These findings provide evidence that, in practical settings, audio-based AI classifiers did not improve on simple predictive scores based on users reporting their symptoms.

Whilst the study did not return a positive outcome in terms of offering a novel solution to screening and diagnosing diseases such as Covid-19, the researchers were able to introduce new methods for characterising complex, high-dimensional bias, and make best-practice recommendations for handling recruitment bias. The findings also offered fresh insight for assessing audio-based classifiers by their utility in relevant practical settings.

Professor Steven Gilmour, Head of the Department of Mathematics, said: “This research is timely in highlighting the need for caution when it comes to constructing machine learning evaluation procedures, aimed at yielding representative performance metrics. The important lessons from this case study on the effects of confounding extend across many applications in AI, where biases are often hard to spot and difficult to control for.”

Kieran Baker added: “It is still possible that technologies that use AI audio classifiers like this could work in the future. Recent publications show progress in detecting sleep apnea and chronic obstructive pulmonary disease (COPD) from audio recordings. But in order to be certain that this is really working as we hope, it is vital that these models, alongside the data, go through robust model development and testing.”

Source: King's College London

05.03.2023