Image source: Mikael Häggström, CT of a normal brain, sagittal 20 / Helmut Januschka, Mrt big, mashup & colorization by HiE/Behrends (CC BY-SA 3.0)

News • Research explores DBGAN method

AI fuses CT and MRI scans for better diagnostics

Research shows how artificial intelligence (AI) can be used to fuse images from clinical X-ray computed tomography (CT) and magnetic resonance imaging (MRI) scans.

The research is published in the International Journal of Biomedical Engineering and Technology.

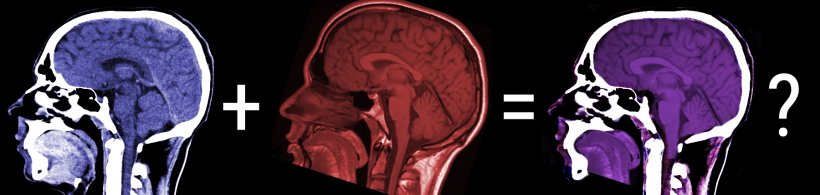

The method, known as the Dual-Branch Generative Adversarial Network (DBGAN), could allow a clearer and more clinically useful interpretation of CT and MRI scans. Essentially, it combines the hard, bony structures captured by a CT scan with the soft-tissue detail of an MRI image. The work could improve clinical diagnosis and patient care for a wide range of conditions in which these scans are commonly used but each has limitations on its own.

CT imaging uses X-rays to capture detailed cross-sectional images of the body, or part of it, which can be combined into a three-dimensional representation; bone, which absorbs X-rays strongly, shows up particularly clearly. MRI, in contrast, employs strong magnetic fields and radio waves to produce precise images of soft tissues, such as organs and diseased or damaged tissue. Merging the two modalities could give clinicians a more comprehensive picture of a patient's anatomy and reveal details of their condition that would otherwise stay hidden.

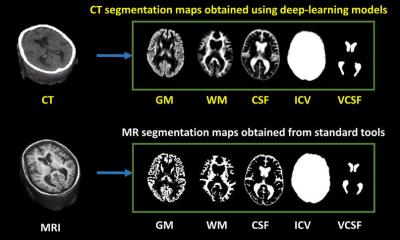

Wenzhe Zhai, Wenhao Song, Jinyong Chen, Guisheng Zhang, and Mingliang Gao of Shandong University of Technology in Zibo, China, and Qilei Li of Queen Mary University of London, United Kingdom, have used DBGAN to carry out their CT and MRI fusion. DBGAN is a deep-learning technique with a dual-branch structure made up of multiple generators and discriminators. The generators create fused images that combine the salient features and complementary information from the CT and MRI scans.

The discriminators assess the generated images by trying to tell them apart from real images. Their verdicts are fed back to the generators, which learn to produce increasingly convincing fused images; ideally, training continues until the discriminators can no longer reliably distinguish fused images from real ones. This adversarial relationship between generators and discriminators allows the AI to fuse CT and MRI images efficiently and realistically, so that artefacts are minimised and visual information is maximised.
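
The article does not spell out DBGAN's loss functions, so what follows is only a minimal sketch of the generic adversarial objective that many GANs build on, written in Python with hypothetical helper names (`bce`, `discriminator_loss`, `generator_loss`). It assumes each discriminator outputs a probability that its input is a real image; DBGAN's actual losses, and what it treats as "real", may well differ.

```python
import math
from typing import Sequence


def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single discriminator output.

    `prob` is the discriminator's estimated probability that the image is real;
    `target` is 1.0 for real images and 0.0 for generated (fused) ones.
    """
    p = min(max(prob, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Low when the discriminator scores real images near 1 and fused images near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled,
    i.e. when it scores the fused images near 1 ("real")."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for a small batch of images.
confident_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(f"discriminator loss: confident={confident_d:.3f}, fooled={fooled_d:.3f}")
print(f"generator loss: caught={generator_loss([0.1, 0.2]):.3f}, "
      f"convincing={generator_loss([0.9, 0.8]):.3f}")
```

In typical GAN training the two losses are minimised in alternation: the discriminator's loss falls as it gets better at spotting fused images, while the generator's loss falls as its fused images become harder to tell apart from real ones.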

DBGAN also incorporates two dedicated modules: a multiscale extraction module (MEM), which extracts important features and fine detail from the CT and MRI scans, and a self-attention module (SAM), which highlights the most relevant and distinctive features in the fused images.
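
The article names the MEM and SAM but does not describe their internals. As a rough, assumption-laden illustration of the two underlying ideas, the Python sketch below uses average pooling at several scales as a stand-in for multiscale extraction (a learned module would use trained convolutions instead) and plain scaled dot-product self-attention, with identity query, key and value projections, as a stand-in for a self-attention module. All function names and the toy inputs are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def avg_pool(img: Matrix, k: int) -> Matrix:
    """Downsample a 2-D image by averaging non-overlapping k x k blocks."""
    rows, cols = len(img) // k, len(img[0]) // k
    return [
        [
            sum(img[i * k + di][j * k + dj] for di in range(k) for dj in range(k)) / (k * k)
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def multiscale_features(img: Matrix, scales: Sequence[int] = (1, 2, 4)) -> List[Matrix]:
    """Stand-in for multiscale extraction: the same image at several resolutions,
    so fine detail (scale 1) and coarse structure (larger scales) are both kept."""
    return [avg_pool(img, k) for k in scales]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Matrix:
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Each output vector is a weighted average of all input vectors, with larger
    weights on the inputs most similar to it, so related features reinforce
    one another.
    """
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(d)])
    return out


# Hypothetical 4 x 4 intensity patch standing in for a region of one slice.
patch = [[float(4 * r + c) for c in range(4)] for r in range(4)]
for level in multiscale_features(patch):
    print(len(level), "x", len(level[0]))

# Hypothetical per-location feature vectors, e.g. [CT value, MRI value].
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
for vec in self_attention(tokens):
    print([round(x, 3) for x in vec])
```

The first loop prints the patch size at each scale (4 x 4, 2 x 2 and 1 x 1); the second shows how the two similar tokens pull each other's outputs together while the dissimilar third token contributes less to them.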

The team tested their DBGAN approach thoroughly, using both subjective and objective assessments, and reports that it outperforms existing techniques in terms of image quality and diagnostic accuracy. Because CT and MRI scans each have their own strengths and weaknesses, AI could allow radiographers to fuse the two types of scan synergistically, combining the strengths of each while compensating for the weaknesses. Fundamentally, the team explains, the DBGAN fusion retains the bone structure details commonly accessible with a CT image and the soft tissue information provided by an MRI scan.

Source: Inderscience

03.07.2023