Image credit: Virginia Tech; photo by Tonia Moxley

News • Responsiveness of machine learning models

Will an AI recognize patient deterioration in the ICU? Not quite yet

Physicians trying to save lives in intensive care units would benefit greatly from being alerted when a patient’s condition rapidly deteriorates or vital signs move into highly abnormal ranges.

While current machine learning models attempt to achieve that goal, a Virginia Tech study recently published in Communications Medicine shows that they are falling short. Models for in-hospital mortality prediction, which estimate the likelihood of a patient dying in the hospital, failed to recognize 66% of the injuries.

“Predictions are only valuable if they can accurately recognize critical patient conditions. They need to be able to identify patients with worsening health conditions and alert doctors promptly,” said Danfeng “Daphne” Yao, professor in the Department of Computer Science and affiliate faculty at the Sanghani Center for Artificial Intelligence and Data Analytics. “Our study found serious deficiencies in the responsiveness of current machine learning models. Most of the models we evaluated cannot recognize critical health events and that poses a major problem.”

To conduct their research, Yao and computer science Ph.D. student Tanmoy Sarkar Pias collaborated with Sharmin Afrose (Oak Ridge National Laboratory, Tennessee), Moon Das Tuli (Greenlife Medical College Hospital, Dhaka, Bangladesh), Ipsita Hamid Trisha (Banner University Medical Center, Tucson, and University of Arizona College of Medicine), Xinwei Deng (Department of Statistics at Virginia Tech), and Charles B. Nemeroff (Department of Psychiatry and Behavioral Sciences, University of Texas at Austin Dell Medical School). Their paper shows that patient data alone is not enough to teach models how to determine future health risks. Calibrating health care models with “test patients” helps reveal the models’ true abilities and limitations.

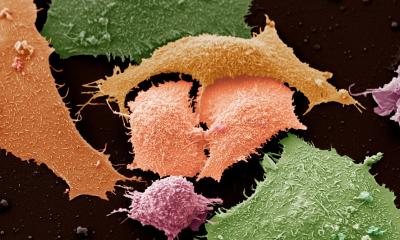

The team developed multiple medical testing approaches, including a gradient ascent method and neural activation map. Color changes in the neural activation map indicate how well machine learning models react to worsening patient conditions. The gradient ascent method can automatically generate special test cases, making it easier to evaluate the quality of a model. “We systematically assessed machine learning models’ ability to respond to serious medical conditions using new test cases, some of which are time series, meaning they use a sequence of observations collected at regular intervals to forecast future values,” Pias said. “Guided by medical doctors, our evaluation involved multiple machine learning models, optimization techniques, and four data sets for two clinical prediction tasks.”
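The article does not describe how the team's gradient ascent method works in detail. As a rough illustration of the general idea only, the sketch below runs gradient ascent on the input of a toy logistic risk model to produce a synthetic “test patient” that the model itself scores as high risk. Everything here is an assumption made for illustration: the model, the weights `w` and `b`, the three standardized features, the bounds, and the function names are hypothetical and are not taken from the study.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_risk(x, w, b):
    """Toy stand-in for a clinical risk model: logistic regression."""
    return sigmoid(x @ w + b)

def gradient_ascent_test_case(x0, w, b, lr=0.5, steps=200, lo=-3.0, hi=3.0):
    """Move the input uphill on predicted risk, staying inside [lo, hi].

    The bounds are a hypothetical plausible range for standardized features.
    """
    x = x0.astype(float).copy()
    for _ in range(steps):
        p = predict_risk(x, w, b)
        grad = p * (1.0 - p) * w          # derivative of sigmoid(w.x + b) w.r.t. x
        x = np.clip(x + lr * grad, lo, hi)
    return x

# Hypothetical weights for three standardized features (illustrative only).
w = np.array([1.5, -2.0, 0.5])
b = -1.0
x0 = np.zeros(3)                           # start from an "average" patient

x_test = gradient_ascent_test_case(x0, w, b)
risk_before = predict_risk(x0, w, b)
risk_after = predict_risk(x_test, w, b)
print("generated test case:", x_test)
print("risk before:", risk_before, "risk after:", risk_after)
```

A generated case like `x_test` could then be reviewed by clinicians: if the feature values the model treats as most dangerous do not match medically critical conditions, or if medically critical inputs fail to raise the score, that points to a responsiveness gap of the kind the study reports.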

In addition to failing to recognize 66% of injuries for in-hospital mortality prediction, in some instances the models failed to generate adequate mortality risk scores for all test cases. The study identified similar deficiencies in the responsiveness of five-year breast and lung cancer prognosis models.

These findings inform future health care research using machine learning and artificial intelligence (AI), Yao said, because they show that statistical machine learning models trained solely on patient data are grossly insufficient and have many dangerous blind spots. To diversify training data, one may leverage strategically developed synthetic samples, an approach Yao’s team explored in 2022 to enhance prediction fairness for minority patients. “A more fundamental design is to incorporate medical knowledge deeply into clinical machine learning models,” she said. “This is highly interdisciplinary work, requiring a large team with both computing and medical expertise.”

In the meantime, Yao’s group is actively testing other medical models, including large language models, for their safety and efficacy in time-sensitive clinical tasks, such as sepsis detection. “AI safety testing is a race against time, as companies are pouring products into the medical space,” she said. “Transparent and objective testing is a must. AI testing helps protect people’s lives and that’s what my group is committed to.”

Source: Virginia Tech

11.03.2025