Image source: Juntendo University; from: Wada et al., npj Digital Medicine 2025

News • Retrieval-augmented generation in contrast media consultations

Eliminating LLM hallucinations in radiology with RAG

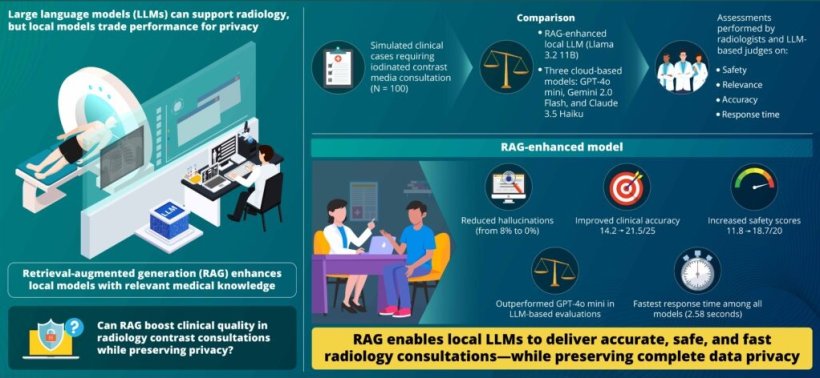

Large language models (LLMs) can support clinical decision-making, but locally deployed versions often underperform their cloud-based counterparts.

Japanese researchers tested whether retrieval-augmented generation (RAG) could enhance a local model’s performance in radiology contrast media consultations. In 100 synthetic cases, the RAG-enhanced model showed no hallucinations, faster responses, and higher rankings from AI judges. These results, published online in npj Digital Medicine, suggest RAG can significantly boost the clinical usefulness of local LLMs while keeping sensitive patient data onsite.


In modern hospitals, timely and accurate decision-making is essential, especially in radiology, where contrast media consultations often require rapid answers rooted in complex clinical guidelines. Yet physicians often have to make these decisions under time pressure, without immediate access to all the relevant information. This challenge is particularly critical for institutions that must also safeguard patient data by avoiding cloud-based tools.

In the new study, a team of researchers led by Associate Professor Akihiko Wada from Juntendo University, Japan, demonstrated that RAG, a technique that enables AI to consult trusted sources during response generation, can significantly improve the safety, accuracy, and speed of locally deployed LLMs for radiology contrast media consultations. The study was co-authored by Dr. Yuya Tanaka from The University of Tokyo, Dr. Mitsuo Nishizawa from Juntendo University Urayasu Hospital, and Professor Shigeki Aoki from Juntendo University Graduate School of Medicine.


The team developed a RAG-enhanced version of a local language model and tested it on 100 simulated cases involving iodinated contrast media, which are widely used in computed tomography (CT) imaging. These consultations typically require real-time risk assessments based on factors such as kidney function, allergies, and medication history. The enhanced model was compared with three leading cloud-based models (GPT-4o mini, Gemini 2.0 Flash, and Claude 3.5 Haiku) as well as with its own baseline version, the same local LLM without RAG.

The results were striking. The RAG-enhanced model eliminated dangerous hallucinations entirely, reducing their rate from 8% in the baseline model to 0%, and it responded significantly faster than the cloud-based systems (2.6 seconds on average, compared with 4.9–7.3 seconds). While the cloud models performed well, the RAG-enhanced system closed the performance gap, delivering safer and faster results while keeping sensitive medical data onsite. “For clinical use, reducing hallucinations to zero is a safety breakthrough,” says Dr. Wada. “These hallucinations can lead to incorrect recommendations about contrast dosage or missed contraindications. Our system generated accurate, guideline-based responses without making those mistakes.” Notably, the model also ran efficiently on standard hospital computers, making it accessible without costly hardware or cloud subscriptions, which is especially valuable for hospitals with limited radiology staff.

The inspiration for this work came directly from clinical experience. “We frequently encounter complex contrast media decisions that require consulting multiple guidelines under time pressure,” recalls Dr. Wada. “For example, cases involving patients with multiple risk factors – reduced kidney function, medication interactions, or allergy histories. We realized that AI could streamline this process, but only if we could keep sensitive patient data within our institution.” The RAG-enhanced model works by retrieving relevant passages, at the time each question is asked, from a curated knowledge base that includes international radiology guidelines and institutional protocols. This grounds each response in verified, up-to-date medical knowledge rather than relying solely on what the model learned during pre-training.
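To make the retrieve-then-generate idea more concrete, here is a minimal Python sketch. It is not the authors' implementation: the article does not describe their embedding model, search method, or prompt, so this sketch swaps in simple bag-of-words cosine similarity for retrieval. The knowledge-base passages (`KNOWLEDGE_BASE`) and the function names (`retrieve`, `build_prompt`) are hypothetical placeholders, and the passages contain no real guideline content. The final step, sending the prompt to a locally hosted LLM, is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: placeholder passages standing in for guideline
# and protocol excerpts. These are NOT real clinical recommendations.
KNOWLEDGE_BASE = [
    "Placeholder: guideline passage on assessing kidney function before iodinated contrast.",
    "Placeholder: protocol passage on patients with a prior allergic-like reaction to contrast media.",
    "Placeholder: guideline passage on reviewing medication history before contrast administration.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query.

    Lexical similarity is an assumed stand-in for the embedding search
    a production RAG system would typically use.
    """
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Ground the model by asking it to answer only from the retrieved context."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the consultation using only the numbered context below. "
        "If the context does not cover the question, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    question = "Patient has reduced kidney function; is iodinated contrast OK?"
    passages = retrieve(question, KNOWLEDGE_BASE)
    # In a real deployment this prompt would go to the on-premises LLM.
    print(build_prompt(question, passages))
```

Because the model is told to answer only from the numbered passages, each answer can be traced back to a specific source, which is the mechanism RAG relies on to reduce hallucinations. Keeping both the knowledge base and the model on local hardware is what keeps patient data inside the institution.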

Beyond radiology, the researchers envision this technology being applied to emergency medicine, cardiology, internal medicine, and even medical education. It could also be particularly valuable for rural hospitals and healthcare providers in low-resource settings, offering them instant access to expert-level guidance.

Overall, this study represents a major breakthrough in clinical AI, showing that it is possible to achieve expert-level performance without compromising patient privacy. The RAG-enhanced model paves the way for safer, more equitable, and immediately deployable AI solutions in healthcare. As hospitals worldwide seek to balance technological advancement with ethical responsibility, this research offers a practical and scalable path forward. “We believe this represents a new era of AI-assisted medicine,” says Dr. Wada. “One where clinical excellence and patient privacy go hand in hand.”

Source: Juntendo University

09.07.2025