News • Memory-Driven Computing

Fast-forward for dementia research

The German Center for Neurodegenerative Diseases (DZNE) has just put a new high-performance computer into operation in Bonn. It is expected to significantly accelerate the evaluation of biomedical data and thus speed up progress in dementia research. To do this, the computer relies on the principles of a novel computer architecture called "Memory-Driven Computing".

Time is running out: dementias such as Alzheimer's disease are already among the biggest challenges in medicine today, and the problem will grow as the elderly population increases. New approaches to prevention and therapy could emerge from the analysis of genomic and brain imaging data. However, evaluating these data requires enormous computing power. The DZNE and Hewlett Packard Enterprise (HPE) have therefore started a collaboration to realize the potential of Memory-Driven Computing for medical research. The DZNE is the first institute in the world to use this radically new computer architecture for biomedical research.

Following a joint feasibility study, DZNE and HPE are now taking the next step: the new high-performance computer "HPE Superdome Flex" has just been put into operation at the DZNE's computing center in Bonn. Until now, DZNE scientists had tested their algorithms on HPE computers in the USA. Even on those systems, they were able to cut the runtime of a genomic computing process from 22 minutes to 13 seconds. Now the Bonn researchers have a system of their own.

A completely new architecture

Memory-Driven Computing, developed by HPE, breaks radically with the tradition of all earlier computers by placing the memory, rather than the processor, at the center of the architecture: "Ideally, all data is held in the huge memory at the same time," says Prof. Joachim Schultze, genome researcher and a senior scientist at the DZNE. "This means you do not have to read data from external storage media; instead, the processor can access it directly." This saves a great deal of time and energy. Schultze adds: "Working with external storage media is like a jigsaw puzzler who has spread the pieces of the puzzle across a large number of boxes and has to open them one after another to search for matching parts. If, on the other hand, you have laid out all the pieces in front of you, you reach your goal much faster." According to Schultze, Memory-Driven Computing works in a similar way.

HPE developed the Superdome Flex computer based on the Memory-Driven Computing architecture. In addition, its hardware is designed for extremely fast data exchange. This makes it possible to offer Memory-Driven Computing to DZNE researchers across disciplines. "We are currently working on the specifications for data processing. However, we want to start evaluating data from experiments and studies as soon as possible. Our vision is to compare genomic data from thousands of individuals within a few minutes," emphasizes Schultze.

A core problem in dementia research is the sheer amount of data. This applies, for example, to the analysis of regions of the genome that are thought to be relevant to a disease. Such sequences can span hundreds of millions of genetic building blocks. Evaluating them and comparing them with reference data can take several weeks on conventional high-performance computers. With the help of the new computer now available in Bonn, the DZNE researchers want to significantly optimize their evaluation algorithms.

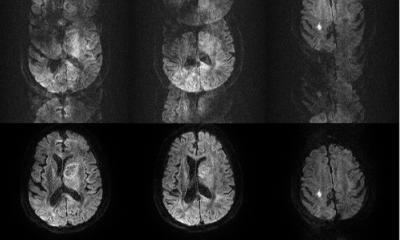

Another application arises from studies involving thousands of individuals. The data collected must be compared again and again with the reference data of the other study participants. A single subject alone can account for hundreds of gigabytes of data, that is, hundreds of billions of digital information units. This is especially true when different types of data are linked together, such as data from genome analysis and data from brain imaging. Such datasets then have to be compared with a similarly huge amount of data from thousands of other subjects in order to find, for example, Alzheimer-specific changes. This task, searching petabytes of data (millions of billions of information units) for abnormalities, cannot be solved by processing the data sequentially. Instead, the DZNE researchers need as much of the data as possible to be directly accessible at the same time, that is, held in memory.

10,000 times faster

HPE's new approach responds to the fact that further increases in processor performance are running up against physical limits. This is a major problem given the exponentially growing amounts of data that computers have to handle. However, the company has already demonstrated that Memory-Driven Computing can speed up calculations by many thousands of times. Since May 2017, HPE has been running a prototype with 160 terabytes of memory in its lab in Fort Collins (USA), the largest single-memory system in the world. The architecture allows the memory to be scaled up to 4,096 yottabytes. For comparison, that is 250,000 times the amount of data that exists on Earth today.

Source: German Center for Neurodegenerative Diseases (DZNE)

28.03.2018