Source: Nature Communications/CC BY

How intelligent is Artificial Intelligence?

Scientists put AI systems to the test and provide a glimpse into the diverse “intelligence” spectrum observed in current AI models.

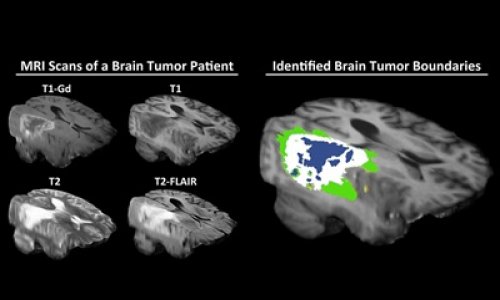

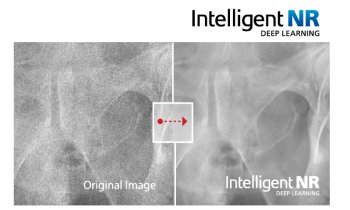

Artificial intelligence (AI) and machine learning algorithms such as deep learning are becoming integral parts of ever more areas of our lives: They enable digital language assistants or translation services, improve medical diagnostics and are indispensable for future technologies such as autonomous driving. Based on an ever-increasing quantity of data and powerful, novel computer architectures, the capacity of learning algorithms appears to be equal or even superior to that of humans. The issue: Until now scientists have had very little understanding of how AI systems make decisions. As such, it was often not clear if these decisions were intelligent or if they were just statistically successful.

Researchers at Technische Universität Berlin (TU Berlin), the Fraunhofer Heinrich Hertz Institute (HHI), and Singapore University of Technology and Design (SUTD) have tackled this question. Using a novel technology that allows automated analysis and quantification, they provide a glimpse into the diverse “intelligence” spectrum observed in current AI systems. Their work has been published in Nature Communications.

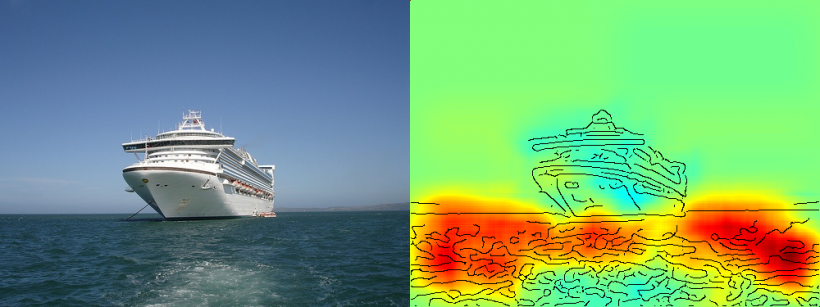

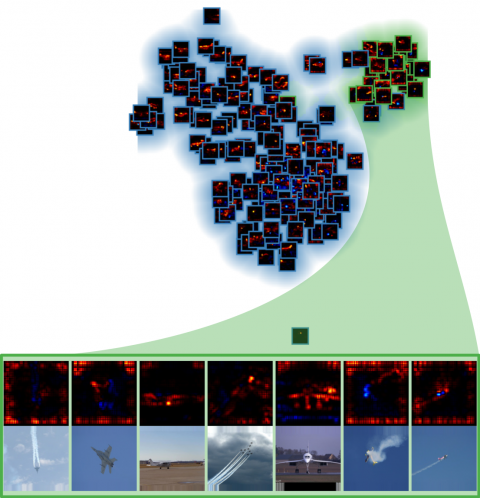

The most important prerequisite for this novel technology is layer-wise relevance propagation (LRP), a technique developed by TU Berlin and HHI that makes it possible to visualize the criteria AI systems use when making decisions. Spectral Relevance Analysis (SpRAy), an extension of LRP, identifies and quantifies a wide spectrum of learned decision-making behavior. This also makes it possible to identify undesired decision-making, even in very large datasets. “What we refer to as ‘explainable AI’ is one of the most important steps towards a practical application and dissemination of AI,” says Dr. Klaus-Robert Müller, professor of machine learning at TU Berlin. “We need to ensure that no AI algorithms with suspect problem-solving strategies or algorithms which employ cheating strategies are used, especially in such areas as medical diagnosis or safety-critical systems.”
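To make the idea of relevance propagation concrete, here is a minimal sketch of one commonly described LRP variant (the epsilon rule) on a tiny two-layer ReLU network. It is an illustration only, not the researchers' implementation: the feature names, weights and the helper `lrp_dense` are hypothetical and chosen by hand.

```python
import numpy as np

def lrp_dense(a, W, b, R_out, eps=1e-9):
    """Redistribute the relevance R_out of a dense layer onto its inputs a
    (epsilon rule). Hypothetical helper, not part of any published toolbox."""
    z = a @ W + b                              # pre-activations of the layer
    z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabilizer avoids division by zero
    s = R_out / z                              # relevance per unit of pre-activation
    return a * (W @ s)                         # share each input contributed

# Hypothetical toy "ship" classifier with three hand-made input features.
FEATURES = ["hull shape", "water background", "watermark"]
x = np.array([1.0, 2.0, 0.5])
W1 = np.array([[0.5, -0.3],
               [0.2,  0.8],
               [-0.4, 0.1]])
b1 = np.zeros(2)
W2 = np.array([[1.0, -0.5],
               [0.7,  0.3]])
b2 = np.zeros(2)

# Forward pass.
h = np.maximum(0.0, x @ W1 + b1)   # hidden ReLU activations
out = h @ W2 + b2                  # class scores; index 0 plays the role of "ship"

# Backward relevance pass: start from the score of the explained class only.
R_out = np.zeros_like(out)
R_out[0] = out[0]
R_h = lrp_dense(h, W2, b2, R_out)
R_x = lrp_dense(x, W1, b1, R_h)

for name, r in zip(FEATURES, R_x):
    print(f"{name:>16}: {r:+.3f}")
```

With zero biases the input relevances sum to the explained class score, and in this hand-built example most of the relevance lands on the "water background" feature rather than on the object itself, which is the kind of shortcut the article goes on to describe.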

These newly developed algorithms have enabled scientists not only to test existing AI systems but also to derive quantitative information about them: they observed a whole spectrum, ranging from naive problem-solving behaviors to cheating strategies to highly elaborate “intelligent” strategic solutions. Dr. Wojciech Samek, group leader at Fraunhofer HHI: “We were very surprised by just how broad the range of learned problem-solving strategies is. Even modern AI systems have not always found a meaningful approach, at least not from a human perspective, instead sometimes adopting what we call ‘Clever Hans’ strategies.”

Clever Hans was a horse who was believed to be able to count and do sums and who became a scientific sensation around 1900. It later emerged that Hans could not do math at all but was still able to provide the correct answer to 90 per cent of the sums he was asked to perform on the basis of the questioner’s reaction.

The team around Klaus-Robert Müller and Wojciech Samek discovered similar “Clever Hans” strategies in a number of AI systems. One example is an AI system that won several international image classification competitions some years ago while pursuing a strategy that can be considered naive from a human perspective: it classified images primarily on the basis of context. Images featuring a lot of water, for example, were assigned to the category “ship”. Other images featuring rails were classified as “train”. Still other images were correctly classified on the basis of a copyright watermark. This AI system therefore did not solve the task it was actually set, namely to identify ships or trains, even though it ultimately classified the majority of images correctly.
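Shortcuts such as the watermark strategy are what a spectral analysis of relevance maps is meant to surface. The sketch below is a simplified stand-in for that idea, not the SpRAy implementation itself: it assumes six hand-made, flattened four-pixel relevance maps, a Gaussian affinity and an unnormalized graph Laplacian, and the function name `spectral_summary` is hypothetical.

```python
import numpy as np

def spectral_summary(maps, sigma=0.3):
    """Group relevance maps by similarity using the spectrum of a graph Laplacian.
    Simplified illustration; all choices here are assumptions."""
    diff = maps[:, None, :] - maps[None, :, :]
    d2 = (diff ** 2).sum(axis=-1)            # pairwise squared distances
    A = np.exp(-d2 / (2 * sigma ** 2))       # Gaussian affinity between maps
    np.fill_diagonal(A, 0.0)                 # no self-loops
    L = np.diag(A.sum(axis=1)) - A           # unnormalized graph Laplacian
    eigvals, eigvecs = np.linalg.eigh(L)     # eigenvalues in ascending order
    # The largest gap between consecutive eigenvalues suggests a cluster count.
    n_clusters = int(np.argmax(np.diff(eigvals))) + 1
    # Sign of the second eigenvector splits the data (valid for a two-way split only).
    labels = (eigvecs[:, 1] > 0).astype(int)
    return eigvals, n_clusters, labels

# Hypothetical relevance maps: rows 0-2 focus on pixel 0 (the object),
# rows 3-5 focus on pixel 3 (a corner where a watermark might sit).
maps = np.array([
    [0.9, 0.1, 0.0, 0.0],
    [0.8, 0.1, 0.1, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.9],
    [0.0, 0.1, 0.1, 0.8],
    [0.0, 0.0, 0.0, 1.0],
])

eigvals, n_clusters, labels = spectral_summary(maps)
print("suggested number of strategies:", n_clusters)
print("group of each map:", labels)
```

In this toy data the eigengap points to two groups of maps. A person would then inspect one representative heatmap per group to decide whether it reflects a sensible strategy or a shortcut.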

Researchers were also able to identify such faulty problem-solving strategies in a number of state-of-the-art AI algorithms, so-called deep neural networks, which until now were considered immune to such lapses. These algorithms base their classification decisions in part on artifacts that were created during the preparation of the images and that have nothing to do with the actual image content. “Such AI systems are completely useless in practice. Indeed, their use in medical diagnostics or in security-critical areas entails enormous dangers,” Klaus-Robert Müller warns. “It is quite conceivable that as many as half of the AI systems currently in use implicitly or explicitly rely on such ‘Clever Hans’ strategies. We now need to systematically examine the situation so as to develop secure AI systems.”

Using their new technology, however, the researchers have also identified AI systems which have unexpectedly learned “smart” strategies. Examples include systems that have learned to play the Atari games Breakout and Pinball. “In these cases, the AI systems quite clearly ‘understood’ the concept of the games and were able to find an intelligent way to collect a lot of points in a targeted manner, while taking few risks. Sometimes the system intervenes in ways a real player would not,” says Wojciech Samek.

“Beyond understanding AI strategies, our work establishes the usability of explainable AI for iterative dataset design, namely for removing artifacts in a dataset which would cause an AI to learn flawed strategies. In addition, it helps to decide which unlabeled examples need to be annotated and added so that failures of an AI system can be reduced,” adds Alexander Binder, Assistant Professor at SUTD.

“Our automated technology is open source and available to all scientists. We see our work as a first important step of many towards making AI systems more robust, explainable and secure in the future. This is an essential prerequisite for general use of AI,” concludes Klaus-Robert Müller.

Source: TU Berlin

12.03.2019