Google AI can now predict cardiovascular problems from retinal scans

This is a big step forward, Google officials said, because the tech is not imitating an existing diagnostic but rather using machine learning to uncover a new way to predict health problems.

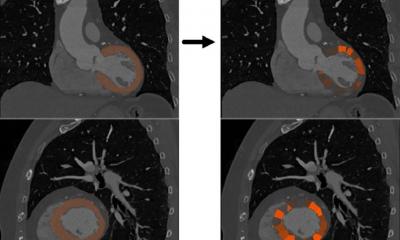

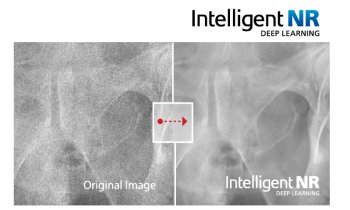

Google AI has made a breakthrough: successfully predicting cardiovascular problems such as heart attacks and strokes from images of the retina alone, with no blood draws or other tests necessary. What's more, the new system shows which parts of the eye image drive its predictions, giving researchers new leads into what causes cardiovascular disease. The results of the Google AI research have been published in an article titled "Prediction of Cardiovascular Risk Factors from Retinal Fundus Photographs via Deep Learning" in Nature Biomedical Engineering.

"Using deep learning algorithms trained on data from 284,335 patients, we were able to predict CV risk factors from retinal images with surprisingly high accuracy for patients from two independent data sets of 12,026 and 999 patients," Lily Peng, MD, product manager and a lead on these efforts within Google AI, wrote in the Google AI official blog. "For example, our algorithm could distinguish the retinal images of a smoker from that of a non-smoker 71 percent of the time, compared to a ~50 percent (i.e. random) accuracy by human experts," she said.

Doctors can typically distinguish between the retinal images of patients with severe high blood pressure and those of patients with normal blood pressure. Google AI's algorithm goes further: it could predict systolic blood pressure to within 11 mmHg on average across all patients, including those with and without high blood pressure.
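To make "within 11 mmHg on average" concrete, one reading is a mean absolute error: the average size of the gap between predicted and measured pressure. The article does not name the metric, so that reading is an assumption. The short Python sketch below shows the arithmetic; the function name and the blood pressure values are hypothetical and are not data from the study.

```python
def mean_absolute_error(measured, predicted):
    """Average absolute gap between measured and predicted values."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / len(measured)


# Made-up systolic readings in mmHg, for illustration only.
measured_sbp = [118, 135, 150, 162]
predicted_sbp = [125, 128, 140, 175]

# Absolute errors are 7, 7, 10 and 13, so the mean is 9.25 mmHg.
print(mean_absolute_error(measured_sbp, predicted_sbp))  # 9.25
```

An average error of this kind says nothing about any single patient: individual predictions can miss by more or less than the average.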

In addition to predicting the various risk factors – age, gender, smoking, blood pressure, etc. – from retinal images, Google AI's algorithm was fairly accurate at predicting the risk of a CV event directly. The algorithm used the entire image to quantify the association between the image and the risk of heart attack or stroke, Peng wrote. "Given the retinal image of one patient who (up to 5 years) later experienced a major CV event (such as a heart attack) and the image of another patient who did not, our algorithm could pick out the patient who had the CV event 70 percent of the time," she said. "This performance approaches the accuracy of other CV risk calculators that require a blood draw to measure cholesterol."
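The 70 percent figure Peng describes is a pairwise comparison: take one patient who had an event and one who did not, and check whether the algorithm assigns the higher risk to the first. A minimal Python sketch of that calculation follows. The function name and the risk scores are hypothetical, made up for illustration, and are not taken from the Google AI study.

```python
from itertools import product


def pairwise_concordance(event_scores, no_event_scores):
    """Share of (event, no-event) pairs in which the event patient
    received the higher risk score. Ties count as half a win."""
    if not event_scores or not no_event_scores:
        raise ValueError("both groups must be non-empty")
    wins = 0.0
    for event, no_event in product(event_scores, no_event_scores):
        if event > no_event:
            wins += 1.0
        elif event == no_event:
            wins += 0.5
    return wins / (len(event_scores) * len(no_event_scores))


# Made-up risk scores, for illustration only.
had_event = [0.9, 0.6, 0.4]
no_event = [0.7, 0.3, 0.2, 0.1]

# 10 of the 12 possible pairs are ranked correctly.
print(f"{pairwise_concordance(had_event, no_event):.2f}")  # 0.83
```

A score of 0.5 on this measure corresponds to random guessing and 1.0 to a perfect ranking. This pairwise measure is equivalent to the area under the ROC curve.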

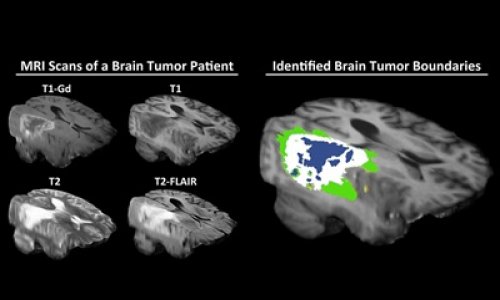

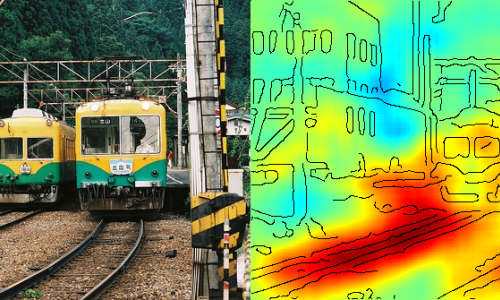

This work may represent a new method of scientific discovery. "Traditionally, medical discoveries are often made through a sophisticated form of guess and test – making hypotheses from observations and then designing and running experiments to test the hypotheses," wrote Peng. "However, with medical images, observing and quantifying associations can be difficult because of the wide variety of features, patterns, colors, values and shapes that are present in real images."

Google AI's approach uses deep learning to draw connections between changes in the human anatomy and disease, akin to how doctors learn to associate signs and symptoms with the diagnosis of a new disease. This, Peng added, could help scientists generate more targeted hypotheses and drive a wide range of future research.

Source: HIMSS/Bill Siwicki

21.02.2018