© Vadislav – stock.adobe.com

Article • Societal and ethical impacts explored at ECR 2025

How AI is transforming radiology – and radiologists

Patient communication facilitated by chatbots, image quality optimized by machine learning: Artificial intelligence (AI) is entering radiology at breakneck speed, transforming the specialty almost beyond recognition. So, what will the future of diagnostic imaging under AI look like, and what role will humans still play in it? At the ECR congress in Vienna, experts explored the societal and ethical impact of AI on healthcare delivery, patient engagement, and professional practices in radiology.

Article: Wolfgang Behrends

Starting off the panel, Dr Erik Briers pointed out how AI may help empower patients, allowing them to move from the role of a passive recipient to that of an active collaborator in the quest to find a solution for their medical problem. Instead of consulting “Dr. Google”, patients are in many cases better off using AI-powered large language models (LLMs), the Vice Chairman of the European Prostate Cancer Coalition (Europa Uomo) found: while a traditional search engine will often provide conflicting and unreliable results, an LLM will synthesize medical information and present it in a way that facilitates understanding. However, neither source should replace professional medical consultation, the expert stressed.

To reap the benefits of AI for their health, patients often enter a relationship of mutual assistance, Briers said: On the one hand, they provide their personal data, which can be used to enhance the diagnostic capabilities of the algorithm; on the other hand, the AI may use this data to provide tailor-made medical advice. This way, many patients contribute to the quality of the AI system so that many more can benefit, he argued. Medical experts also play a vital role in this exchange, as they are needed to annotate the data so that the AI can use it in a meaningful way.

Bias reinforcement and energy demand

Despite the immense potential of AI for advancing medical communication, education and workflows in general, the low-threshold nature of LLMs also brings new challenges, cautioned Hendrik Erenstein. For one, the responses provided by the AI may look very convincing at first glance, but still contain inaccurate or incomplete information. The issue of these “confabulations” is further exacerbated by automation bias – the human tendency to accept automated responses without further questioning them. ‘This is a very true issue which we should be aware of,’ the expert from the Hanze University of Applied Sciences in Groningen emphasized.

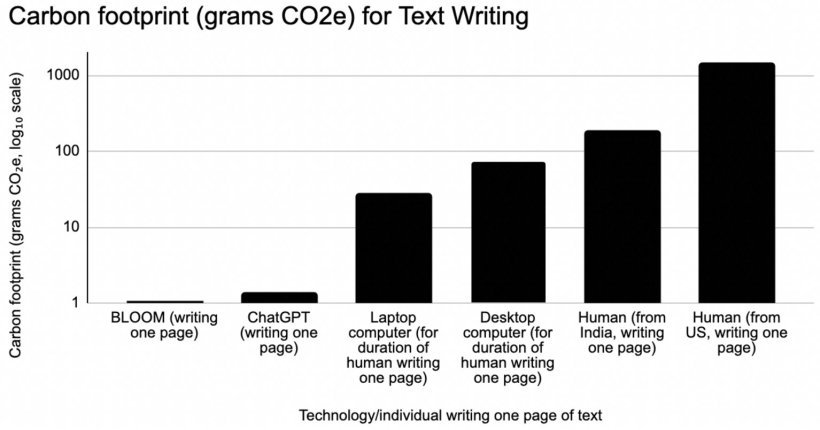

Another important aspect to consider when working with LLMs is sustainability, he continued. While the high energy consumption of these models has attracted criticism, Erenstein advocated a more nuanced view: training the models is indeed very energy-demanding, but using them to write text has been shown to be more energy-efficient than having a human do the same work.[1] ‘The scalability of these models is actually what makes them quite accessible,’ he concluded.

Image source: Tomlinson B, Black RW, Patterson DJ, Torrance AW; Scientific Reports 2024 (CC BY 4.0)

Building trust through responsible practice

Following up, Mélanie Champendal made it clear just how profoundly AI will impact the work of radiologists in the future. Referring to recent studies, the expert from the School of Health Sciences in Lausanne explained that AI-based tools can be applied to virtually every stage of the radiology workflow – from patient preparation and planning to image acquisition, processing and reporting.[2,3] However, to achieve not only widespread adoption, but also acceptance of AI in radiology, the expert stressed that ‘it is not enough to rely on the technological and organisational levels. Social and human factors must also be taken into account.’ To this end, experts advocate the use of “responsible AI”, which is built around values of equity and fairness, protects data privacy, minimizes bias and provides information transparency and explainability.[4] ‘It is a commitment to ethical, inclusive collaboration that builds trust,’ Champendal summarized.

For AI to be truly “responsible” in this sense, it must be developed and deployed with respect for the environment, she argued.[5] This can be further broken down into two areas: AI sustainability and AI for sustainability. While the former focuses on measures to decrease the carbon footprint of an AI model (for example, by using renewable energy sources or optimizing data storage), the latter explores how the use of AI can contribute to more sustainable clinical practices. Examples of this include reduced scan times, more efficient stand-by modes for imaging devices, lower contrast agent dosage, or even avoidance of unnecessary scans to decrease energy consumption.[6,7]

Embracing new possibilities – and new roles

The increasing number of AI applications also sparks discontent among many radiologists, Champendal pointed out. While some might distrust AI-generated results for their lack of transparency, others even fear that the machine will take their job. To differentiate between legitimate and unfounded concerns, the expert advised her colleagues to attain a fundamental level of AI literacy – to better understand what the algorithm can and cannot do.

‘AI is expanding the role of radiographers, and creating new opportunities for specialization and leadership,’ she said. For example, their unique skillset will become increasingly valuable in quality assurance or data management. ‘They play a crucial role in ensuring that AI is safely and effectively integrated into patient care. By embracing these new roles, radiographers can stay at the forefront of imaging innovation. AI should not replace healthcare professionals. Instead, it should empower and support them in delivering better outcomes,’ she concluded.

Profiles:

Dr Erik Briers, MS, PhD, is Vice Chairman and one of the founders of the European Prostate Cancer Coalition (Europa Uomo) in Hasselt, Belgium. He is also a member of the European Society of Radiology’s Patient Advisory Group (ESR-PAG).

Hendrik Erenstein is a lecturer-researcher at the Hanze University of Applied Sciences in Groningen, the Netherlands. His research focuses on radiography, radiation safety and AI implementation in diagnostic imaging.

Mélanie Champendal is a radiographer/radiological technologist at the School of Health Sciences (Haute École de Santé Vaud; HESAV) in Lausanne, Switzerland.

References:

1. Tomlinson B, Black RW, Patterson DJ, Torrance AW: The carbon emissions of writing and illustrating are lower for AI than for humans; Scientific Reports 2024; https://doi.org/10.1038/s41598-024-54271-x

2. Hardy M, Harvey H: Artificial intelligence in diagnostic imaging: impact on the radiography profession; British Journal of Radiology 2020; https://doi.org/10.1259/bjr.20190840

3. Nensa F, Demircioglu A, Rischpler C: Artificial Intelligence in Nuclear Medicine; Journal of Nuclear Medicine 2019; https://doi.org/10.2967/jnumed.118.220590

4. Walsh G, Stogiannos N, van de Venter R et al.: Responsible AI practice and AI education are central to AI implementation: a rapid review for all medical imaging professionals in Europe; BJR Open 2023; https://doi.org/10.1259/bjro.20230033

5. Stogiannos N, Georgiadou E, Rarri N, Malamateniou C: Ethical AI: A qualitative study exploring ethical challenges and solutions on the use of AI in medical imaging; European Journal of Radiology: Artificial Intelligence 2025; https://doi.org/10.1016/j.ejrai.2025.100006

6. Jobin A, Ienca M, Vayena E: The global landscape of AI ethics guidelines; Nature Machine Intelligence 2019; https://doi.org/10.1038/s42256-019-0088-2

7. Doo FX, Vosshenrich J, Cook TS et al.: Environmental Sustainability and AI in Radiology: A Double-Edged Sword; Radiology 2024; https://doi.org/10.1148/radiol.232030

26.08.2025