News • Future Health Index 2025

‘In medical AI we trust’? Many patients disagree

Royal Philips has released its 10th annual Future Health Index (FHI) report, highlighting the growing strain on global healthcare systems.

The FHI 2025 report, the largest global survey of its kind analyzing the key concerns of healthcare professionals and patients, indicates that AI holds promise for transforming care delivery. However, gaps in trust threaten to stall progress at a time when innovation is most needed.

“The need to transform healthcare delivery has never been more urgent,” said Carla Goulart Peron, M.D., Chief Medical Officer at Philips. “In more than half of the 16 countries surveyed, patients are waiting nearly two months or more for specialist appointments, with waits in Canada and Spain extending to four months or longer. As healthcare systems face mounting pressures, AI is rapidly emerging as a powerful ally, offering unprecedented opportunities to transform care and overcome today’s toughest challenges.”

Recommended article

Article • Transformative technology

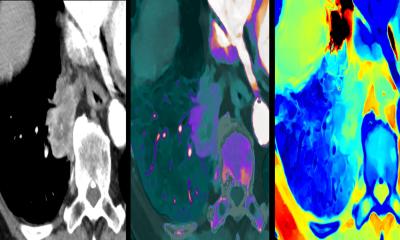

Generative AI in healthcare: More than a chatbot

‘Computer, why did the doctor take that MRI scan of my leg? And what did it show?’: Popularized by OpenAI’s ChatGPT, generative artificial intelligence (AI) is already beginning to see practical applications in medical settings. The technology holds immense potential, with benefits for patients, clinicians, and even hospital administration, according to Shez Partovi, MD.

The FHI 2025 report reveals that 33% of patients have experienced worsening health due to delays in seeing a doctor, and that more than 1 in 4 end up in the hospital because of long wait times. “Cardiac patients face especially dangerous delays, with 31% being hospitalized before even seeing a specialist. Without urgent action, a projected shortfall of 11 million health workers by 2030 could leave millions without timely care,” Dr. Peron added.

More than 75% of healthcare professionals report losing clinical time due to incomplete or inaccessible patient data. One-third lose more than 45 minutes per shift, adding up to 23 full days a year lost by each professional. “These inefficiencies amplify stress on already understaffed teams and contribute to burnout,” said Gretchen Brown, RN, VP and Chief Nursing Information Officer at Stanford Health Care. “Recognizing this, as clinicians, we see AI as a solution and understand that delayed adoption can also carry major risks.”

Of the nearly 2,000 healthcare professionals surveyed, many foresee serious consequences if AI is not implemented:

- 46% fear missed opportunities for early diagnosis and intervention

- 46% cite growing burnout from non-clinical tasks

- 42% worry about an expanding patient backlog

While clinicians are generally optimistic, the FHI 2025 report highlights a significant trust gap with patients: 34% more clinicians than patients see AI’s benefits, and optimism is especially low among patients aged 45 and older. Even among clinicians, skepticism remains: 69% are involved in AI and digital technology development, but only 38% believe these tools meet real-world needs. Concerns around accountability persist, with over 75% unclear about who is liable for AI-driven errors. Data bias is another major worry, as it risks deepening healthcare disparities if left unaddressed. “To build trust with clinicians, we need education, transparency in decision-making, rigorous validation of models, and the involvement of healthcare professionals in every step of the process,” Brown added.

Patients want AI to work safely and effectively, reducing errors, improving outcomes, and enabling more personalized, compassionate care. Clinicians say trust hinges on clear legal and ethical standards, strong scientific validation, and continuous oversight. As AI reshapes healthcare, building trust is essential to delivering life-saving innovation faster and at scale.

“To realize the full potential of AI, regulatory frameworks must evolve to balance rapid innovation with robust safeguards to ensure patient safety and foster trust among clinicians,” said Shez Partovi, Chief Innovation Officer at Philips. “By 2030, AI could transform healthcare by automating administrative tasks, potentially doubling patient capacity as AI agents assist, learn, and adapt alongside clinicians. To that end, we must design AI with people at the center—built in collaboration with clinicians, focused on safety, fairness, and representation—to earn trust and deliver real impact in patient care.”

Source: Philips

16.05.2025