© Marco2811 – stock.adobe.com

News • A question of liability

Who’s to blame when AI makes a medical error?

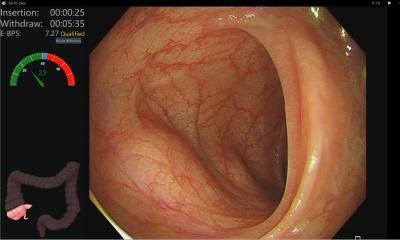

In gastrointestinal (GI) endoscopy, artificial intelligence (AI) is becoming an essential tool, especially for the computer-aided detection of precancerous colon polyps during screening colonoscopy.

This integration marks a significant advance in gastroenterology care. Errors nevertheless remain inevitable, and in some cases AI algorithms themselves could contribute to them. To address this, physician-scientists at the Center for Advanced Endoscopy at Beth Israel Deaconess Medical Center (BIDMC), in collaboration with legal experts from Pennsylvania State University and Maastricht University, are pioneering efforts to develop guidelines on medical liability for AI use in GI endoscopy.

A recent paper, led by BIDMC gastroenterologists Sami Elamin, MD, and Tyler Berzin, MD, published in Clinical Gastroenterology and Hepatology, represents the first international effort to explore the legal implications of AI in GI endoscopy from the perspective of both gastroenterologists and legal scholars.

Berzin, an advanced endoscopist at BIDMC and Associate Professor of Medicine at Harvard Medical School, has led several of the early national and international studies of AI for precancerous colon polyp detection, a ‘level 1’ assistive algorithm. AI tools are poised to advance beyond polyp detection and may soon help predict polyp diagnoses, potentially replacing the need for tissue biopsy in certain cases. The authors suggest that even higher levels of automation are both technically feasible and imminent, potentially assisting physicians with automated endoscopy reports and recommendations.

Lead author Sami Elamin, a clinical fellow in Gastroenterology at BIDMC and Harvard Medical School, used hypothetical scenarios to explore whether individual physicians or healthcare organizations could be held legally accountable for a variety of AI-generated errors that might occur in GI endoscopy.

The degree of legal responsibility for AI errors, the authors conclude, will depend on how these tools are integrated into clinical practice and on the level of automation of the algorithms. To ensure the safety, proper implementation, and monitoring of these AI tools, collaboration among hospitals, medical groups, and gastroenterologists is crucial. Specialty societies and healthcare organizations must establish guidelines for physician oversight of AI tools at various automation levels. For physicians, meticulous clinical documentation, whether they follow or deviate from AI recommendations, remains a cornerstone of minimizing liability risk.

Source: Beth Israel Deaconess Medical Center

27.05.2024