© Limon Das – pexels.com (text: HiE)

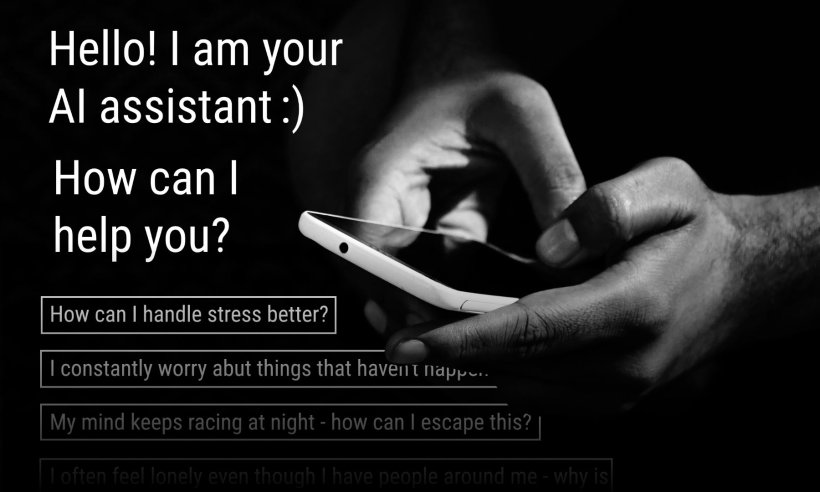

News • Virtual companions, real responsibility

AI chatbots for mental health: experts call for clear regulation

Researchers point out dangers of LLM use without legal guardrails

While these tools offer new possibilities, they also pose significant risks, especially for vulnerable users. Researchers from Else Kröner Fresenius Center (EKFZ) for Digital Health at TUD Dresden University of Technology and the University Hospital Carl Gustav Carus have therefore published two articles calling for stronger regulatory oversight. Their publication in Nature Human Behaviour outlines the urgent need for clear regulations for AI characters. A second article in npj Digital Medicine highlights dangers if chatbots offer therapy-like guidance without medical approval, and argues for their regulation as medical devices.

AI characters are currently slipping through the gaps in existing product safety regulations

Mindy Nunez Duffourc

General-purpose large language models (LLMs) like ChatGPT or Gemini are not designed as specific AI characters or therapeutic tools. Yet simple prompts or specific settings can turn them into highly personalized, humanlike chatbots. Interaction with AI characters can negatively affect young people and individuals with mental health challenges. Users may form strong emotional bonds with these systems, but AI characters remain largely unregulated in both the EU and the United States. Importantly, they differ from clinical therapeutic chatbots, which are explicitly developed, tested, and approved for medical use.

“AI characters are currently slipping through the gaps in existing product safety regulations,” explains Mindy Nunez Duffourc, Assistant Professor of Private Law at Maastricht University and co-author of the publication. “They are often not classified as products and therefore escape safety checks. And even where they are newly regulated as products, clear standards and effective oversight are still lacking.”

Recent international reports have linked intensive personal interactions with AI chatbots to mental health crises. The researchers argue that systems imitating human behavior must meet appropriate safety requirements and operate within defined legal frameworks. At present, however, AI characters largely escape regulatory oversight before entering the market.

Recommended article

Article • Technology overview

Artificial intelligence (AI) in healthcare

With the help of artificial intelligence, computers are to simulate human thought processes. Machine learning is intended to support almost all medical specialties. But what is going on inside an AI algorithm, what are its decisions based on? Can you even entrust a medical diagnosis to a machine? Clarifying these questions remains a central aspect of AI research and development.

In their publication in npj Digital Medicine, the team further outlines the risks of unregulated mental health interactions with LLMs. The authors show that some AI chatbots provide therapy-like guidance, or even impersonate licensed clinicians, without any regulatory approval. Hence, the researchers argue that LLMs providing therapy-like functions should be regulated as medical devices, with clear safety standards, transparent system behavior, and continuous monitoring. “AI characters are already part of everyday life for many people. Often these chatbots offer doctor or therapist-like advice. We must ensure that AI-based software is safe. It should support and help – not harm. To achieve this, we need clear technical, legal, and ethical rules,” says Stephen Gilbert, Professor of Medical Device Regulatory Science at the EKFZ for Digital Health, TUD Dresden University of Technology.

We need to ensure that these technologies are safe and protect users’ mental well-being rather than put it at risk

Falk Gerrik Verhees

The research team emphasizes that the transparency requirement of the European AI Act – simply informing users that they are interacting with AI – is not enough to protect vulnerable groups. They call for enforceable safety and monitoring standards, supported by voluntary guidelines to help developers with implementing safe design practices.

As solution they propose linking future AI applications with persistent chat memory to a so-called “Guardian Angel” or “Good Samaritan AI” – an independent, supportive AI instance to protect the user and intervene when necessary. Such an AI agent could detect potential risks at an early stage and take preventive action, for example by alerting users to support resources or issuing warnings about dangerous conversation patterns.

Recommendations for safe interaction with AI

In addition to implementing such safeguards, the researchers recommend robust age verification, age-specific protections, and mandatory risk assessments before market entry. “As clinicians, we see how language shapes human experience and mental health,” says Falk Gerrik Verhees, psychiatrist at Dresden University Hospital Carl Gustav Carus. “AI characters use the same language to simulate trust and connection – and that makes regulation essential. We need to ensure that these technologies are safe and protect users’ mental well-being rather than put it at risk,” he adds.

The researchers argue that clear, actionable standards are needed for mental health-related use cases. They recommend that LLMs clearly state that they are not an approved mental health medical tool. Chatbots should refrain from impersonating therapists, and limit themselves to basic, non-medical information. They should be able to recognize when professional support is needed and guide users toward appropriate resources. Effectiveness and application of these criteria could be ensured through simple open access tools to test chatbots for safety on an ongoing basis.

“Our proposed guardrails are essential to ensure that general-purpose AI can be used safely and in a helpful and beneficial manner,” concludes Max Ostermann, researcher in the Medical Device Regulatory Science team of Prof. Gilbert and first author of the publication in npj Digital Medicine.

Important note:

In times of a personal crisis please seek help at a local crisis service, contact your general practitioner, a psychiatrist/psychotherapist or in urgent cases go to the hospital. In Germany you can call 116 123 (in German) or find offers in your language online at www.telefonseelsorge.de/internationale-hilfe.

Source: Dresden University of Technology

09.12.2025