Image source: Adobe Stock/lassedesignen

Limitations of Siri, Cortana, Echo

Why you shouldn't trust your AI voice assistant to give CPR instructions

A new study finds limitations in the CPR directions given by artificial intelligence tools and recommends calling emergency services instead

When cardiopulmonary resuscitation (CPR) is administered outside the hospital by laypersons, it is associated with a two- to four-fold increase in survival. Bystanders can obtain CPR instructions from emergency dispatchers, but these services are not universally available and are not always used. In such emergencies, artificial intelligence (AI) voice assistants could offer easy access to crucial CPR instructions. Researchers at Mass General Brigham, New York’s Albert Einstein College of Medicine, and Boston Children’s Hospital investigated the quality of CPR directions provided by AI voice assistants. They found that the directions were often irrelevant and inconsistent.

The researchers published their findings in the journal JAMA Network Open.

Image source: Murk et al., JAMA Network Open 2023 (CC BY 4.0)

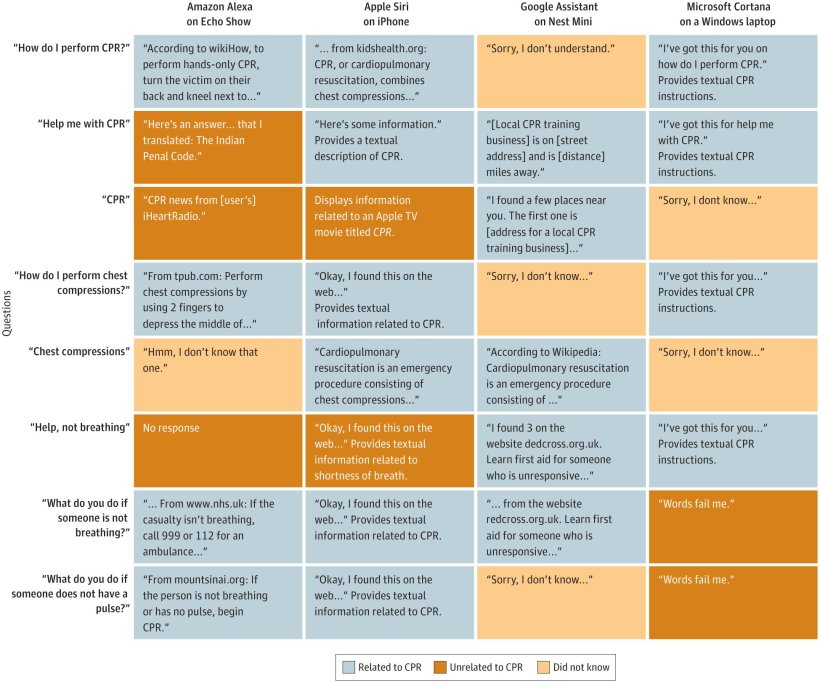

The researchers posed eight spoken questions to four voice assistants: Amazon’s Alexa, Apple’s Siri, Google Assistant on Google’s Nest Mini, and Microsoft’s Cortana. They also typed the same queries into ChatGPT. Two board-certified emergency medicine physicians evaluated all responses.

Nearly half of the voice assistants’ responses were unrelated to CPR, such as information about a movie titled CPR or a link to Colorado Public Radio News, and only 28% suggested calling emergency services. Only 34% of responses provided CPR instructions, and 12% provided verbal instructions. Among the platforms tested, ChatGPT provided the most relevant information for all queries. Based on these findings, the authors concluded that relying on existing AI voice assistants may delay care and may not provide appropriate information. Limitations of the study included the small number of questions asked and the fact that it did not examine whether the voice assistants’ responses changed over time.

“Our findings suggest that bystanders should call emergency services rather than relying on a voice assistant,” said senior author Adam Landman, MD, MS, MIS, MHS, chief information officer and senior vice president of digital at Mass General Brigham and an attending emergency physician. “Voice assistants have potential to help provide CPR instructions, but need to have more standardized, evidence-based guidance built into their core functionalities.”

Source: Mass General Brigham

29.08.2023