The accuracy of any medical AI can only be as good as its training data. Developers need to take extra care to identify and remove bias in their algorithms so that patients of any race, age or gender are not put at a disadvantage. Keep reading to find out more about how movie tropes skew the view of clinical reality, a new prototype sensor scalpel for surgical training, health communication on antimicrobial resistance, and more. Enjoy reading!

Article • Need for diversity in training datasets

Artificial intelligence in healthcare: not always fair

Machine learning and AI are playing an increasingly important role in medicine and healthcare, and were doing so well before ChatGPT. This is especially true in data-intensive specialties such as radiology, pathology or intensive care. The quality of ...

News • AI-based imaging and reporting solutions for MRI

Philips partners with imaging biomarker specialist Quibim

Philips and Quibim have signed a multi-year agreement to work on an integrated solution that includes AI-based software to automate real-time prostate gland segmentation in MR images.

News • Platform for new companies

MEDICA 2023: Start-ups are shaking things up

The MEDICA trade fair (Nov 13-16, Düsseldorf) is a major event for start-ups entering the health sector. Hundreds of young developers seek business contacts for cooperation on funding, production, approval, marketing or sales of their products.

News • Diversity in medicine

Breaking down the bias: how women in medicine are portrayed in films

Seeing a female doctor in a film may inspire women to pursue a career in medicine. However, the clinical gender reality is not well-represented in movies, a new study finds.

News • Evolution of Sars-CoV-2

Coronavirus model predicts new variants

What does the future of the coronavirus look like? An international research team from Cologne and New York has developed a model to predict the likely evolution of Sars-CoV-2.

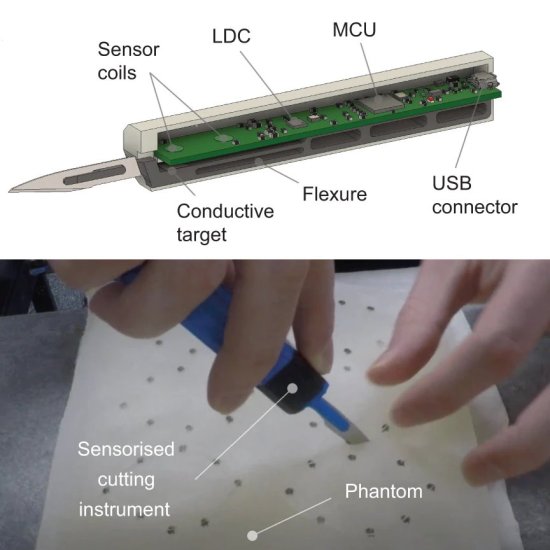

News • Sensorised equipment

'Smart' scalpel could help doctors hone surgical skills

Scalpels with built-in sensors could streamline training for surgeons and pave the way for procedures performed by robotic devices, a study suggests.

News • Chronotype, duration, apnea and more

How sleep impacts cardiovascular health

A new study identifies five aspects of sleep that are almost equally important in explaining the association between sleep and the risk of coronary events and stroke.

News • Health communication on AMR

How to improve public understanding of “antimicrobial resistance”? Rename it

A new study on public health communication shows that the term commonly used to describe bacteria resistant to current medicines or antibiotics fails to stick in people’s memories.

ePaper

Article • Information & insights

EUROPEAN HOSPITAL 4/2025 is out!

The latest issue of EUROPEAN HOSPITAL is here! We cover promising applications of mobile MRI, latest developments in breast cancer screening, strategies to protect healthcare institutions from cyberattacks, and more.

Copyright © 2025 mgo fachverlage GmbH & Co. KG. E.-C.-Baumann-Straße 5, 95326 Kulmbach, Germany